
Using kubectl


Contents

[toc]

1. kubectl command auto-completion

Enable auto-completion for kubectl (requires the bash-completion package):

```bash
source <(kubectl completion bash)
```

🍀 1. Installation on CentOS

```bash
# Install the packages
yum install -y epel-release bash-completion

# Run the commands
source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)
echo "source <(kubectl completion bash)" >> ~/.bashrc
source ~/.bashrc
```
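If you run the `echo ... >> ~/.bashrc` line more than once, it appends a duplicate entry each time. Below is a minimal sketch of an idempotent alternative. The `append_once` helper is hypothetical and not part of kubectl. It appends the line only when that exact line is not already in the file.

```bash
# Hypothetical helper (not part of kubectl): append a line to a file only if
# the exact same line is not already present, so re-running setup is harmless.
append_once() {
  local line="$1" file="$2"
  touch "$file"                      # make sure the file exists before grep reads it
  # -x: match the whole line, -F: treat the line as a fixed string, not a regex
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage:
# append_once 'source <(kubectl completion bash)' ~/.bashrc
```

The official kubectl documentation also shows how to get completion on a short alias: add `alias k=kubectl` and `complete -o default -F __start_kubectl k` to `~/.bashrc`.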

🍀 2. Installation on Ubuntu

```bash
apt install bash-completion
source <(kubectl completion bash)
```

2. The kubeconfig file kubectl uses to authenticate to the k8s cluster

💘 Hands-on: testing the kubeconfig file kubectl uses to connect to k8s (test succeeded) - 20211021

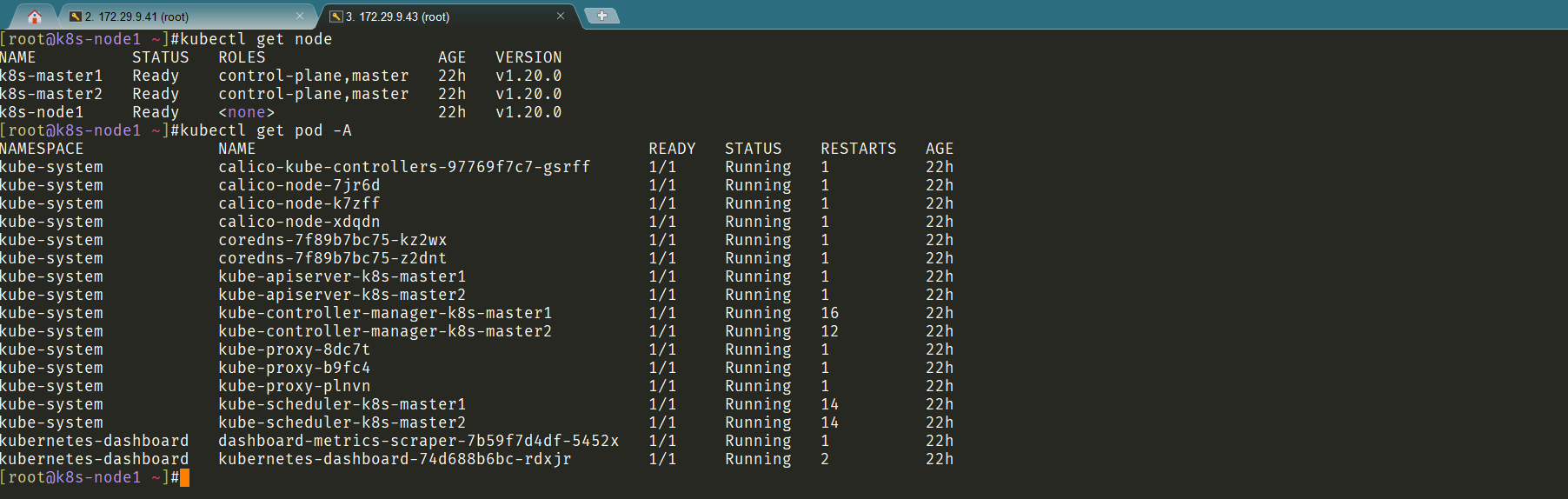

Lab environment

1. Windows 10 with VMware Workstation virtual machines;
2. k8s cluster: 3 CentOS 7.6 (1810) VMs, 1 master node and 2 worker nodes;
   - k8s version: v1.21
   - CONTAINER-RUNTIME: docker://20.10.7

1. Test procedure

Let's start testing.

On the master node, the kubeconfig files are located here:

```bash
[root@k8s-master1 ~]#ll /etc/kubernetes/admin.conf
-rw------- 1 root root 5564 Oct 20 15:55 /etc/kubernetes/admin.conf
[root@k8s-master1 ~]#ll .kube/config
-rw------- 1 root root 5564 Oct 20 15:56 .kube/config
[root@k8s-master1 ~]#
```

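kubectl was already installed on the worker node, but it cannot query the cluster. With no kubeconfig to read, kubectl falls back to localhost:8080: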

```bash
[root@k8s-node1 ~]#kubectl get po
The connection to the server localhost:8080 was refused - did you specify the right host or port?
[root@k8s-node1 ~]#
```

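Next, check whether a kubeconfig file exists in the corresponding locations on the worker node. Neither admin.conf nor .kube/config is there!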

```bash
[root@k8s-node1 ~]#ll /etc/kubernetes/
kubelet.conf  manifests/  pki/
[root@k8s-node1 ~]#ll .kube
```

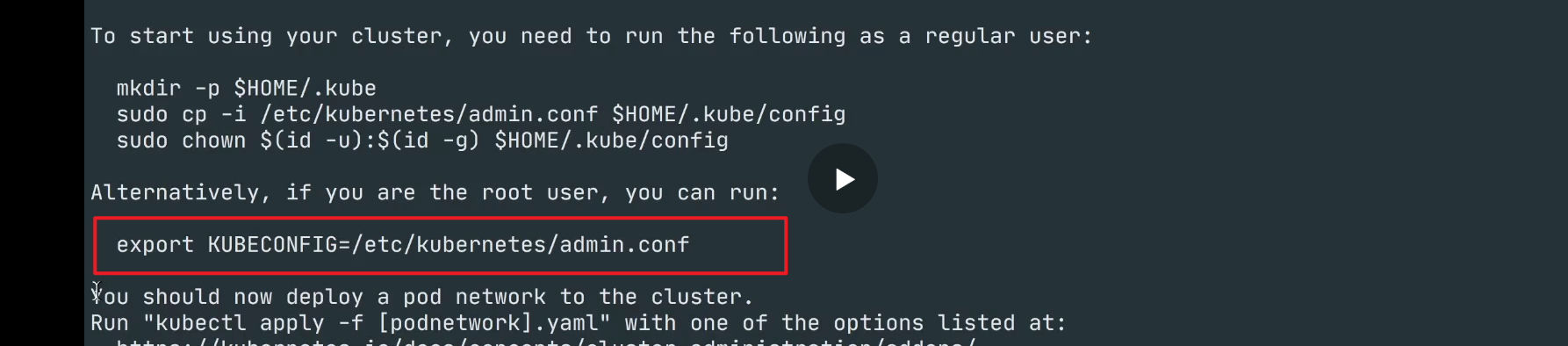

The following commands set up kubectl's kubeconfig while the k8s cluster was being built. They copy the kubeconfig file to kubectl's default path:

```bash
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```
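The three commands above can be wrapped into one reusable step. Below is a minimal sketch using a hypothetical `install_kubeconfig` function. If `~/.kube/config` already exists, it keeps a timestamped backup instead of having `cp -i` prompt. It then uses `install -m 600` to copy the file with owner-only permissions.

The sketch makes two assumptions:
- GNU coreutils are available.
- The caller can read the source file. admin.conf is root-only, so run it as root, or keep the `sudo cp` plus `chown` form above for a regular user.

```bash
# Hypothetical helper: copy a kubeconfig into ~/.kube/config with mode 0600,
# keeping a timestamped backup of any existing config instead of prompting.
install_kubeconfig() {
  local src="$1" dest="$HOME/.kube/config"
  [ -r "$src" ] || { echo "cannot read $src" >&2; return 1; }
  mkdir -p "$HOME/.kube"
  if [ -e "$dest" ]; then
    # preserve the previous config, e.g. config.bak.20211021153000
    cp -p "$dest" "$dest.bak.$(date +%Y%m%d%H%M%S)"
  fi
  # install(1) copies the file and sets its mode in one step;
  # the new file is owned by the user running the command.
  install -m 600 "$src" "$dest"
}

# Usage (as root on the master):
# install_kubeconfig /etc/kubernetes/admin.conf
```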

Next, transfer the master's config file to the worker node and configure it there:

```bash
# On the master node, scp the kubeconfig file
[root@k8s-master1 ~]#scp .kube/config root@172.29.9.43:/etc/kubernetes/
config                                  100% 5564     1.7MB/s   00:00
[root@k8s-master1 ~]#

# On the worker node, configure it
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/config $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

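Test whether the worker node can now access the k8s cluster, for example by running `kubectl get po` again. It can, so the test succeeded!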
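Copying works because the `server:` address in the master's kubeconfig is reachable from the worker node. Before copying a kubeconfig to another machine, a quick check can help. Below is a minimal sketch using a hypothetical `check_kubeconfig_server` function. It prints every `server:` entry and flags loopback addresses, which only work on the host that owns them.

The function parses lines with awk rather than real YAML, so treat it as a rough shortcut. With kubectl available, `kubectl config view --minify -o jsonpath='{.clusters[0].cluster.server}'` reports the server of the current context instead.

```bash
# Hypothetical helper: list the API server address(es) in a kubeconfig and
# warn about loopback addresses that other nodes cannot reach.
# Line-based parsing of "server:" keys; not a full YAML parser.
check_kubeconfig_server() {
  local file="$1" servers s rc=0
  servers=$(awk '$1 == "server:" {print $2}' "$file")
  if [ -z "$servers" ]; then
    echo "no server: entry found in $file" >&2
    return 2
  fi
  for s in $servers; do
    case "$s" in
      https://127.*|https://localhost*|http://127.*|http://localhost*)
        echo "WARNING: $s is a loopback address, unreachable from other nodes" >&2
        rc=1 ;;
      *)
        echo "server: $s" ;;
    esac
  done
  return $rc
}

# Usage (on the master, before scp):
# check_kubeconfig_server ~/.kube/config
```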

2. Summary

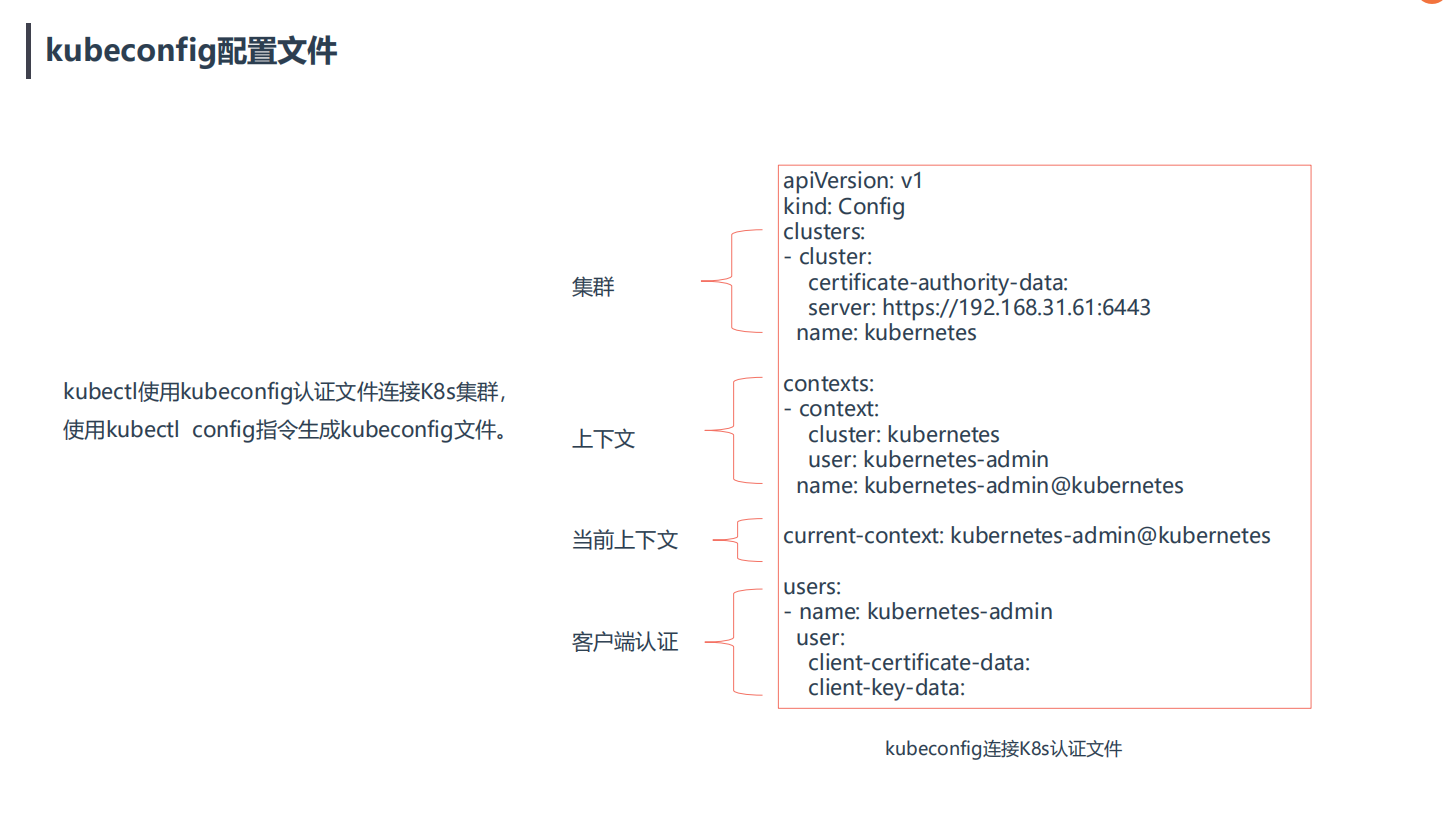

1. The kubeconfig file

kubectl connects to the K8s cluster using a kubeconfig authentication file. You can generate a kubeconfig file with the `kubectl config` commands.

```yaml
# kubeconfig: the authentication file for connecting to K8s
apiVersion: v1
kind: Config
clusters:
- cluster:
    certificate-authority-data:
    server: https://192.168.31.61:6443
  name: kubernetes

contexts:
- context:
    cluster: kubernetes
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
current-context: kubernetes-admin@kubernetes

users:
- name: kubernetes-admin
  user:
    client-certificate-data:
    client-key-data:
```
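Each top-level section of the file above maps to one `kubectl config` subcommand. Below is a sketch of building such a file from scratch, wrapped in a hypothetical `make_kubeconfig` function. The certificate file names passed to it are placeholders for your own CA, client certificate and key. `--embed-certs=true` makes kubectl store them as the `*-data` fields, as in the file above, instead of as file paths.

```bash
# Sketch: build a kubeconfig shaped like the one above using `kubectl config`.
# Arguments: server URL, CA cert, client cert, client key, output file.
# The certificate files are placeholders for your own PKI material.
make_kubeconfig() {
  local server="$1" ca="$2" cert="$3" key="$4" out="$5"
  # clusters[]: API server address plus the CA that signed its certificate
  kubectl config set-cluster kubernetes \
    --server="$server" \
    --certificate-authority="$ca" \
    --embed-certs=true \
    --kubeconfig="$out" || return 1
  # users[]: client certificate and key, embedded as *-data fields
  kubectl config set-credentials kubernetes-admin \
    --client-certificate="$cert" \
    --client-key="$key" \
    --embed-certs=true \
    --kubeconfig="$out" || return 1
  # contexts[]: bind the cluster and the user under one name
  kubectl config set-context kubernetes-admin@kubernetes \
    --cluster=kubernetes \
    --user=kubernetes-admin \
    --kubeconfig="$out" || return 1
  # current-context
  kubectl config use-context kubernetes-admin@kubernetes --kubeconfig="$out"
}

# Usage (file names are hypothetical):
# make_kubeconfig https://192.168.31.61:6443 ca.pem admin.pem admin-key.pem admin.kubeconfig
```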

2. Method: copy the kubeconfig file to kubectl's default path

```bash
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

```bash
[root@k8s-master1 ~]#ll /etc/kubernetes/admin.conf
-rw------- 1 root root 5564 Oct 20 15:55 /etc/kubernetes/admin.conf
[root@k8s-master1 ~]#ll .kube/config
-rw------- 1 root root 5564 Oct 20 15:56 .kube/config
[root@k8s-master1 ~]#
```

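- Note: you can also set an environment variable instead, e.g. as root: `export KUBECONFIG=/etc/kubernetes/admin.conf`.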
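kubectl looks for its kubeconfig in a fixed order:
1. the `--kubeconfig` flag;
2. the `KUBECONFIG` environment variable, a colon-separated list on Linux whose files kubectl merges;
3. `$HOME/.kube/config`.

When none of these yields a usable config, kubectl falls back to localhost:8080, which is the error seen on the worker node earlier. Below is a minimal sketch of that lookup order as a hypothetical `resolve_kubeconfig` function. It only prints which file(s) would be consulted and does not call kubectl.

```bash
# Hypothetical helper: print the kubeconfig file(s) kubectl would read, in the
# documented order: --kubeconfig flag, then $KUBECONFIG, then ~/.kube/config.
resolve_kubeconfig() {
  local flag_path="${1:-}"   # the value you would pass via --kubeconfig (optional)
  if [ -n "$flag_path" ]; then
    printf '%s\n' "$flag_path"
  elif [ -n "${KUBECONFIG:-}" ]; then
    # KUBECONFIG may list several files separated by ':'; kubectl merges them
    printf '%s\n' "$KUBECONFIG" | tr ':' '\n'
  else
    printf '%s\n' "$HOME/.kube/config"
  fi
}

# Usage:
# resolve_kubeconfig                              # what plain `kubectl get po` would use
# resolve_kubeconfig /etc/kubernetes/admin.conf   # like `kubectl --kubeconfig=...`
```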

3. Note: how the kubeconfig file is generated for different installation methods

1. Single-master cluster:

Running `kubeadm init` generates the admin.conf file automatically. Once the command completes, you only need to copy it:

```bash
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

2. Highly available k8s cluster:

Generate the initialization config file:

```bash
cat > kubeadm-config.yaml << EOF
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: 9037x2.tcaqnpaqkra9vsbw
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.29.9.41
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master1
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  certSANs:  # Include every Master/LB/VIP IP; don't miss a single one! To ease later scale-out, add a few reserved IPs.
  - k8s-master1
  - k8s-master2
  - 172.29.9.41
  - 172.29.9.42
  - 172.29.9.88
  - 127.0.0.1
  extraArgs:
    authorization-mode: Node,RBAC
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 172.29.9.88:16443  # Load balancer virtual IP (VIP) and port
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  external:  # Use an external etcd
    endpoints:
    - https://172.29.9.41:2379  # the 3 nodes of the etcd cluster
    - https://172.29.9.42:2379
    - https://172.29.9.43:2379
    caFile: /opt/etcd/ssl/ca.pem  # certificates needed to connect to etcd
    certFile: /opt/etcd/ssl/server.pem
    keyFile: /opt/etcd/ssl/server-key.pem
imageRepository: registry.aliyuncs.com/google_containers  # The default registry k8s.gcr.io is unreachable from mainland China, so the Alibaba Cloud mirror is used
kind: ClusterConfiguration
kubernetesVersion: v1.20.0  # K8s version, matching the one installed above
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16  # Pod network; must match the CNI network plugin yaml deployed later
  serviceSubnet: 10.96.0.0/12  # Cluster-internal virtual network, the unified access entry for Pods
scheduler: {}
EOF
```

Bootstrap the cluster with the config file:

```bash
kubeadm init --config kubeadm-config.yaml
```

```
[root@k8s-master1 ~]#kubeadm init --config kubeadm-config.yaml
[init] Using Kubernetes version: v1.20.0
[preflight] Running pre-flight checks
	[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/
	[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.9. Latest validated version: 19.03
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [k8s-master1 k8s-master2 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 172.29.9.41 172.29.9.88 172.29.9.42 127.0.0.1]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] External etcd mode: Skipping etcd/ca certificate authority generation
[certs] External etcd mode: Skipping etcd/server certificate generation
[certs] External etcd mode: Skipping etcd/peer certificate generation
[certs] External etcd mode: Skipping etcd/healthcheck-client certificate generation
[certs] External etcd mode: Skipping apiserver-etcd-client certificate generation
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address
[kubeconfig] Writing "admin.conf" kubeconfig file
[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 17.036041 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.20" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Skipping phase. Please see --upload-certs
[mark-control-plane] Marking the node k8s-master1 as control-plane by adding the labels "node-role.kubernetes.io/master=''" and "node-role.kubernetes.io/control-plane='' (deprecated)"
[mark-control-plane] Marking the node k8s-master1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[bootstrap-token] Using token: 9037x2.tcaqnpaqkra9vsbw
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities
and service account keys on each node and then running the following as root:

  kubeadm join 172.29.9.88:16443 --token 9037x2.tcaqnpaqkra9vsbw \
    --discovery-token-ca-cert-hash sha256:b83d62021daef2cd62c0c19ee0f45adf574c2eaf1de28f0e6caafdabdf95951d \
    --control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.29.9.88:16443 --token 9037x2.tcaqnpaqkra9vsbw \
    --discovery-token-ca-cert-hash sha256:b83d62021daef2cd62c0c19ee0f45adf574c2eaf1de28f0e6caafdabdf95951d
```
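The `certSANs` comment in kubeadm-config.yaml warns that no Master/LB/VIP address may be missing. The `[certs]` line in the log shows which names and IPs the apiserver certificate was actually signed for.

Below is a minimal sketch of a check you could run before `kubeadm init`, using a hypothetical `check_sans` helper. It reports any given address that is not listed under `certSANs`. It assumes the simple layout of the file above: it parses lines, not YAML. After init, `openssl x509 -in /etc/kubernetes/pki/apiserver.crt -noout -text` shows the SANs actually in the certificate.

```bash
# Hypothetical helper: confirm that every given Master/LB/VIP address appears
# under apiServer.certSANs in a kubeadm config file.
# Line-based parsing that assumes the layout shown above; not a YAML parser.
check_sans() {
  local cfg="$1" addr sans missing=0
  shift
  # Collect the "- item" lines right after "certSANs:", stripping the dash,
  # inline comments and trailing whitespace; stop at the first non-item line.
  sans=$(awk '
    /certSANs:/ { in_sans = 1; next }
    in_sans && /^[ \t]*-[ \t]/ {
      sub(/^[ \t]*-[ \t]*/, "")
      sub(/[ \t]*#.*$/, "")
      sub(/[ \t]+$/, "")
      print
      next
    }
    in_sans { in_sans = 0 }
  ' "$cfg")
  for addr in "$@"; do
    if printf '%s\n' "$sans" | grep -qxF -- "$addr"; then
      echo "OK: $addr"
    else
      echo "MISSING: $addr" >&2
      missing=1
    fi
  done
  return $missing
}

# Usage:
# check_sans kubeadm-config.yaml 172.29.9.41 172.29.9.42 172.29.9.88
```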

4. Question: do worker nodes need admin.conf?

Answer:

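- If you don't run kubectl on a worker node, it doesn't need admin.conf. All cluster maintenance can be done from the master node alone.
- Anyone holding admin.conf can reach the api-server, not only from a worker node but from any machine with network access to it.
- You need admin.conf only where you use kubectl. Spreading it to many nodes so they can all run kubectl is a security risk, because the file carries administrator credentials.
- kubectl is simply a client of the k8s cluster. It can run on a master node, on a worker node, or outside the cluster. All it needs is the API server address and credentials, much like a mysql client talking to a mysql server.
- kubectl needs credentials to talk to the apiserver. The cluster data itself is stored in etcd.
- The .kube/config file is the authentication file for that communication. kubectl uses it to call the apiserver, and the apiserver in turn reads the data stored in etcd. Everything you see with `kubectl get` therefore comes from etcd.
- kubectl never reads etcd itself; the apiserver does. A `kubectl get` is essentially just a query.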
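Anyone holding admin.conf can reach the api-server with administrator rights, so the file should stay readable only by its owner. The `-rw-------` listings on the master show exactly that. Below is a minimal sketch of a permission check using a hypothetical `check_kubeconfig_perms` function. It assumes GNU `stat`, where `stat -c %a` prints the octal mode such as `600`.

```bash
# Hypothetical helper: warn when a kubeconfig grants any permission to group/others.
# Relies on GNU stat: `stat -c %a` prints the octal mode, e.g. 600 or 644.
check_kubeconfig_perms() {
  local file="$1" mode
  mode=$(stat -c %a "$file") || return 2
  case "$mode" in
    *00)  # last two octal digits are the group and other bits; both must be 0
      echo "OK: $file has mode $mode" ;;
    *)
      echo "WARNING: $file has mode $mode; consider: chmod 600 $file" >&2
      return 1 ;;
  esac
}

# Usage:
# check_kubeconfig_perms /etc/kubernetes/admin.conf
# check_kubeconfig_perms ~/.kube/config
```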

3. kubectl official documentation

https://kubernetes.io/zh/docs/reference/kubectl/overview/

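About me

My blog's principles:

- Clean layout, concise language;

- Documents as manuals: detailed steps, no hidden pitfalls, source code provided;

- All my hands-on documents are personally tested and working. If you run into any questions while following along, feel free to contact me and I'll help you solve them. Let's improve together!

🍀 WeChat QR code: x2675263825 (舍得), QQ: 2675263825.

🍀 WeChat official account 《云原生架构师实战》

🍀 Website

🍀 Yuque

https://www.yuque.com/xyy-onlyone

🍀 CSDN https://blog.csdn.net/weixin_39246554?spm=1010.2135.3001.5421

🍀 Zhihu https://www.zhihu.com/people/foryouone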

Finally

That's all for this post. Thanks for reading! I wish you all a happy life and meaningful days. See you next time!


Author: 余温Gueen

Copyright: unless otherwise stated, all articles on this site are licensed under CC BY-NC-SA 4.0. Please credit 余温Gueen Blog when reposting!
