Minimizing Microservice Vulnerabilities


Table of Contents

[toc]

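Hands-on labs in this section

| Lab |
|---|
| 💘 Case: Run a container as a regular (non-root) user - 2023.5.29 (tested successfully) |
| 💘 Case: Avoid privileged containers; use capabilities instead - 2023.5.30 (tested successfully) |
| 💘 Case: Mount the container's root filesystem read-only - 2023.5.30 (tested successfully) |
| Case 1: Forbid creating Pods in privileged mode |
| Example 2: Forbid containers that do not run as a non-root user |
| 💘 Lab: Deploy Gatekeeper - 2023.6.1 (tested successfully) |
| 💘 Case 1: Forbid privileged containers - 2023.6.1 (tested successfully) |
| 💘 Case 2: Allow only specific image registries - 2023.6.1 (tested successfully) |
| 💘 Lab: Integrate gVisor with Docker - 2023.6.2 (tested successfully) |
| 💘 Lab: Integrate gVisor with Containerd - 2023.6.2 (tested successfully) |
| 💘 Lab: Run containers with gVisor in K8s - 2023.6.3 (tested successfully) |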

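1. Pod Security Context

**Security Context:** the security mechanism K8s provides for Pods and containers. It configures privilege and access-control settings for a Pod or an individual container.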

安全上下文限制维度:

• 自主访问控制(Discretionary Access Control):基于用户ID(UID)和组ID(GID),来判定对对象(例如文件)的访问权限。

• 安全性增强的 Linux(SELinux): 为对象赋予安全性标签。

• 以特权模式或者非特权模式运行。

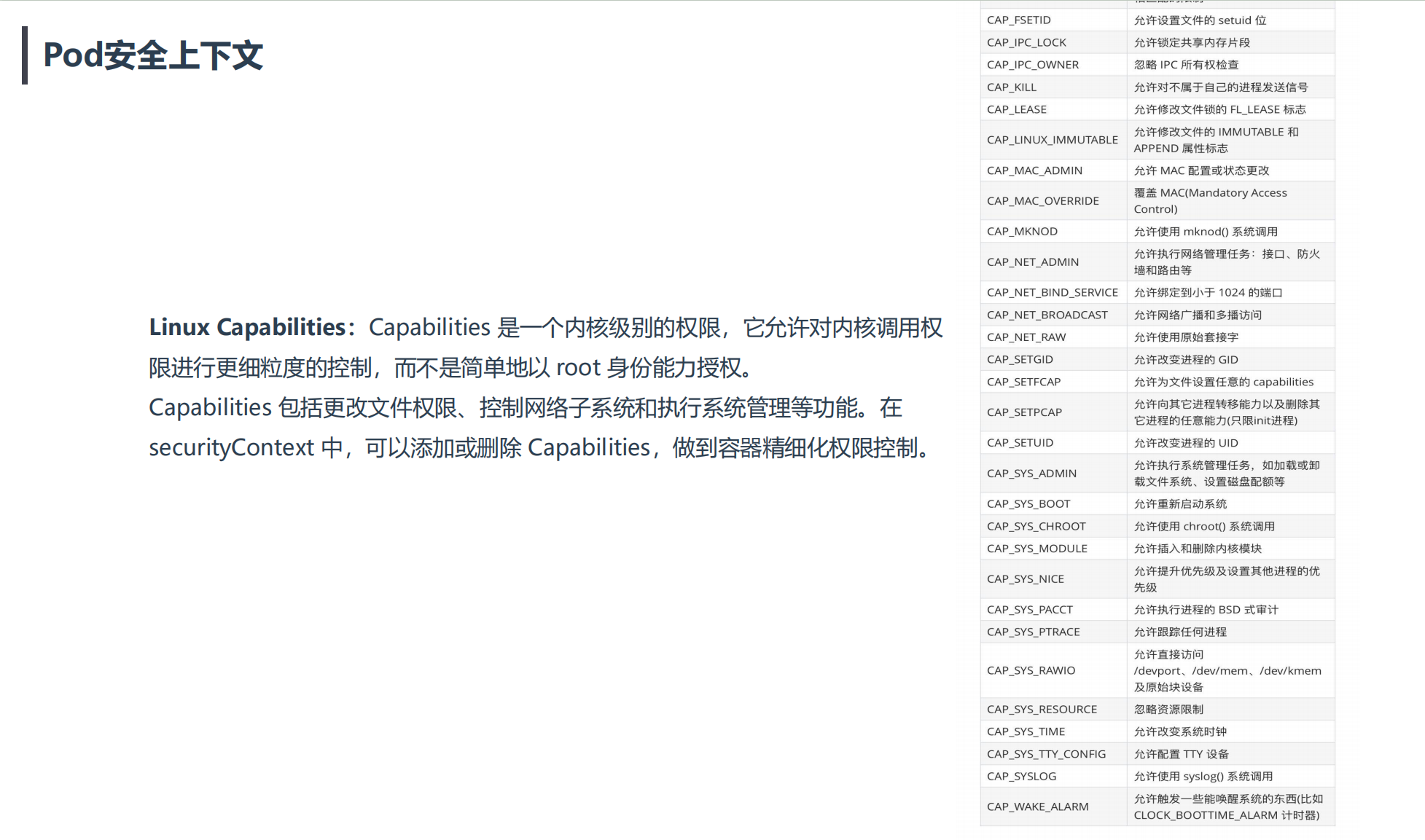

• Linux Capabilities: 为进程赋予 root 用户的部分特权而非全部特权。

• AppArmor:定义Pod使用AppArmor限制容器对资源访问限制

• Seccomp:定义Pod使用Seccomp限制容器进程的系统调用

• AllowPrivilegeEscalation: 禁止容器中进程(通过 SetUID 或 SetGID 文件模式)获得特权提升。当容器以特权模式运行或者具有CAP_SYS_ADMIN能力时,AllowPrivilegeEscalation总为True。

• readOnlyRootFilesystem:以只读方式加载容器的根文件系统。
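The sketch below makes the list above concrete. It is a minimal Python helper of our own, not part of Kubernetes. It inspects one container spec, given as a plain dict shaped like the manifest, and reports which hardening fields are missing or unsafe. It only looks at the container-level `securityContext`. Pod-level settings, SELinux, AppArmor and Seccomp are ignored. The name `find_risky_settings` and the rules themselves are assumptions made for illustration.

```python
from typing import List


def find_risky_settings(container: dict) -> List[str]:
    """Report risky or missing securityContext fields for one container dict."""
    sc = container.get("securityContext") or {}
    findings = []
    if sc.get("privileged") is True:
        findings.append("privileged is true")
    # Anything other than an explicit false is treated as "escalation possible".
    if sc.get("allowPrivilegeEscalation") is not False:
        findings.append("allowPrivilegeEscalation is not false")
    if sc.get("readOnlyRootFilesystem") is not True:
        findings.append("readOnlyRootFilesystem is not true")
    # runAsNonRoot: true or a non-zero runAsUser counts as "runs as non-root".
    if sc.get("runAsNonRoot") is not True and not sc.get("runAsUser"):
        findings.append("no non-root user enforced")
    for cap in (sc.get("capabilities") or {}).get("add") or []:
        findings.append(f"adds capability {cap}")
    return findings


if __name__ == "__main__":
    hardened = {
        "name": "web",
        "securityContext": {
            "runAsUser": 1000,
            "allowPrivilegeEscalation": False,
            "readOnlyRootFilesystem": True,
        },
    }
    risky = {"name": "web", "securityContext": {"privileged": True}}
    print(find_risky_settings(hardened))
    print(find_risky_settings(risky))
```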

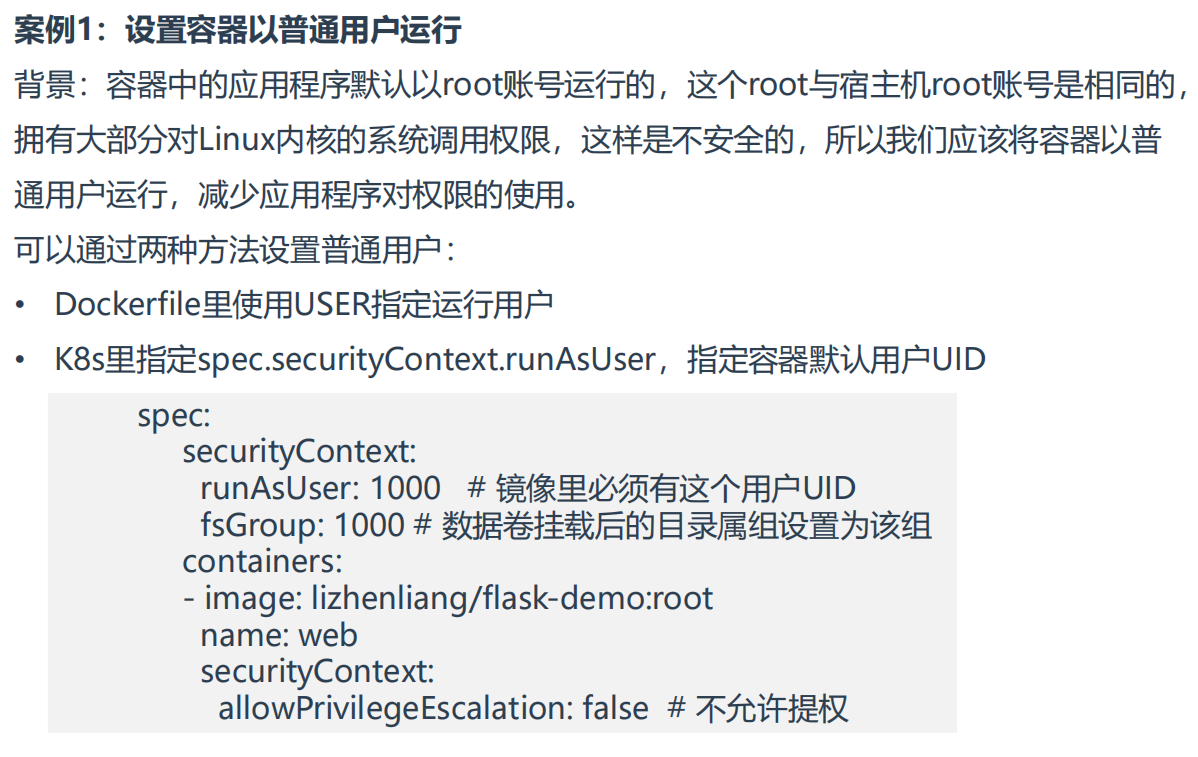

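Case 1: Run a container as a regular user

==💘 Case: Run a container as a regular user - 2023.5.29 (tested successfully)==

- Lab environment

```
Lab environment:
1. Win10, VMware Workstation VMs;
2. K8s cluster: 3 CentOS 7.6 (1810) VMs, 1 master node and 2 worker nodes
   k8s version: v1.20.0
   docker://20.10.7
```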

- Lab software

```
Link: https://pan.baidu.com/s/1mmw8OulDE98CqIy8oMbaOg?pwd=0820
Extraction code: 0820
2023.5.29-securityContext-runAsUser-code
```

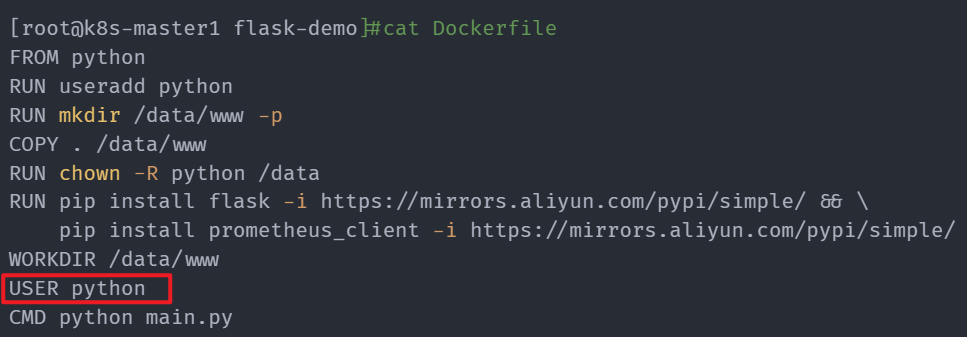

Method 1: Set the runtime user with USER in the Dockerfile

- Upload the software and unzip it

```bash
[root@k8s-master1 ~]#ll -h flask-demo.zip
-rw-r--r-- 1 root root 1.3K May 29 06:33 flask-demo.zip
[root@k8s-master1 ~]#unzip flask-demo.zip
Archive:  flask-demo.zip
   creating: flask-demo/
   creating: flask-demo/templates/
  inflating: flask-demo/templates/index.html
  inflating: flask-demo/main.py
  inflating: flask-demo/Dockerfile
[root@k8s-master1 ~]#cd flask-demo/
[root@k8s-master1 flask-demo]#ls
Dockerfile  main.py  templates
```

- View the relevant files

```bash
[root@k8s-master1 flask-demo]#pwd
/root/flask-demo
[root@k8s-master1 flask-demo]#ls
Dockerfile  main.py  templates

[root@k8s-master1 flask-demo]#cat templates/index.html   # the page to be rendered
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <title>Home</title>
</head>
<body>

<h1>Hello Python!</h1>

</body>
</html>

[root@k8s-master1 flask-demo]#cat main.py
from flask import Flask,render_template

app = Flask(__name__)

@app.route('/')
def index():
    return render_template("index.html")

if __name__ == "__main__":
    app.run(host="0.0.0.0",port=8080)  # the program listens on port 8080

[root@k8s-master1 flask-demo]#cat Dockerfile
FROM python
RUN useradd python
RUN mkdir /data/www -p
COPY . /data/www
RUN chown -R python /data
RUN pip install flask -i https://mirrors.aliyun.com/pypi/simple/ && \
    pip install prometheus_client -i https://mirrors.aliyun.com/pypi/simple/
WORKDIR /data/www
USER python
CMD python main.py
```

- Build the image

```bash
[root@docker flask-demo]#docker build -t flask-demo:v1 .
[root@docker flask-demo]#docker images|grep flask
flask-demo   v1   a9cabb6241ed   22 seconds ago   937MB
```

- Start a container

```bash
[root@docker flask-demo]#docker run -d --name demo-v1 -p 8080:8080 flask-demo:v1
f3d7173e84ad6242d3c037e33a2ff0d654550bcac970534777188092e173dc6e
[root@docker flask-demo]#docker ps -l
CONTAINER ID   IMAGE           COMMAND                  CREATED          STATUS          PORTS                                       NAMES
f3d7173e84ad   flask-demo:v1   "/bin/sh -c 'python …"   41 seconds ago   Up 40 seconds   0.0.0.0:8080->8080/tcp, :::8080->8080/tcp   demo-v1
```

Open the app in a browser: http://172.29.9.65:8080/

- Exec into the container. The user running the program is `python`:

```bash
[root@docker flask-demo]#docker exec -it demo-v1 bash
python@f3d7173e84ad:/data/www$ id
uid=1000(python) gid=1000(python) groups=1000(python)
python@f3d7173e84ad:/data/www$ id python
uid=1000(python) gid=1000(python) groups=1000(python)
python@f3d7173e84ad:/data/www$ ps -ef
UID        PID  PPID  C STIME TTY          TIME CMD
python       1     0  0 04:30 ?        00:00:00 /bin/sh -c python main.py
python       7     1  0 04:30 ?        00:00:00 python main.py

# On the host, the container's processes also run as the regular user with UID 1000:
[root@docker flask-demo]#ps -ef|grep main
1000      80085  80065  0 12:30 ?        00:00:00 /bin/sh -c python main.py
1000      80114  80085  0 12:30 ?        00:00:00 python main.py
```
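The application itself can also refuse to start as root, as a guard on top of `USER python`. Below is a minimal sketch. It assumes you are willing to call a hypothetical `ensure_not_root()` at the top of `main.py`, before `app.run(...)`. It relies on `os.geteuid()`, which exists only on Unix-like systems such as the Linux containers used here.

```python
import os
from typing import Optional


def ensure_not_root(euid: Optional[int] = None) -> int:
    """Raise RuntimeError if the effective UID is 0; otherwise return the UID.

    euid may be passed explicitly (e.g. in tests); by default the effective
    UID of the current process is used (Unix only).
    """
    uid = os.geteuid() if euid is None else euid
    if uid == 0:
        raise RuntimeError(
            "refusing to run as root: set USER in the Dockerfile "
            "or securityContext.runAsUser in Kubernetes"
        )
    return uid
```

Failing fast like this makes a missing `USER` line obvious. Without it, the image silently runs as root, as the v2 test below shows.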

- If we remove `USER python` from the Dockerfile and start another container, the program runs as root by default:

```bash
[root@docker flask-demo]#vim Dockerfile
FROM python
RUN useradd python
RUN mkdir /data/www -p
COPY . /data/www
RUN chown -R python /data
RUN pip install flask -i https://mirrors.aliyun.com/pypi/simple/ && \
    pip install prometheus_client -i https://mirrors.aliyun.com/pypi/simple/
WORKDIR /data/www
#USER python
CMD python main.py


# Build
[root@docker flask-demo]#docker build -t flask-demo:v2 .

# Start the container
[root@docker flask-demo]#docker run -d --name demo-v2 -p 8081:8080 flask-demo:v2
ce527b2bb4b59b28a3b5bb6fb50c4de4d0a5183eaafdb5631d866025af01a319
[root@docker flask-demo]#docker ps -l
CONTAINER ID   IMAGE           COMMAND                  CREATED         STATUS         PORTS                                       NAMES
ce527b2bb4b5   flask-demo:v2   "/bin/sh -c 'python …"   4 seconds ago   Up 3 seconds   0.0.0.0:8081->8080/tcp, :::8081->8080/tcp   demo-v2

# Check which user runs the program inside the container:
[root@docker flask-demo]#docker exec -it demo-v2 bash
root@ce527b2bb4b5:/data/www# id
uid=0(root) gid=0(root) groups=0(root)
root@ce527b2bb4b5:/data/www# id python
uid=1000(python) gid=1000(python) groups=1000(python)
root@ce527b2bb4b5:/data/www# ps -ef
UID        PID  PPID  C STIME TTY          TIME CMD
root         1     0  0 04:41 ?        00:00:00 /bin/sh -c python main.py
root         7     1  0 04:41 ?        00:00:00 python main.py

# Check which user runs this container's processes on the host
[root@docker flask-demo]#ps -ef|grep main
1000      80085  80065  0 12:30 ?        00:00:00 /bin/sh -c python main.py
1000      80114  80085  0 12:30 ?        00:00:00 python main.py
root      80866  80847  0 12:41 ?        00:00:00 /bin/sh -c python main.py
root      80896  80866  0 12:41 ?        00:00:00 python main.py
```

As expected.

- `docker run -u` can also set the user that runs the program in the container. However, a user name passed this way must already exist in the image, otherwise the container cannot start:

```bash
[root@docker flask-demo]#docker run -u xyy -d --name demo-test -p 8082:8080 flask-demo:v2
f7cd85c396fc769982fab012d32aa8cf979c49078503328c522c34c8b6f9f7fc
docker: Error response from daemon: unable to find user xyy: no matching entries in passwd file.
[root@docker flask-demo]#docker ps -a
CONTAINER ID   IMAGE                  COMMAND                  CREATED          STATUS          PORTS                                       NAMES
f7cd85c396fc   flask-demo:v2          "/bin/sh -c 'python …"   54 seconds ago   Created         0.0.0.0:8082->8080/tcp, :::8082->8080/tcp   demo-test
ce527b2bb4b5   flask-demo:v2          "/bin/sh -c 'python …"   4 minutes ago    Up 4 minutes    0.0.0.0:8081->8080/tcp, :::8081->8080/tcp   demo-v2
f3d7173e84ad   flask-demo:v1          "/bin/sh -c 'python …"   15 minutes ago   Up 15 minutes   0.0.0.0:8080->8080/tcp, :::8080->8080/tcp   demo-v1
d83dbc25e4da   kindest/node:v1.25.3   "/usr/local/bin/entr…"   2 months ago     Up 2 months                                                 demo-worker2
8e32056c89da   kindest/node:v1.25.3   "/usr/local/bin/entr…"   2 months ago     Up 2 months                                                 demo-worker
bf4947c5578a   kindest/node:v1.25.3   "/usr/local/bin/entr…"   2 months ago     Up 2 months     127.0.0.1:39911->6443/tcp                   demo-control-plane
```
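The error above occurs because the name is looked up in the image's `/etc/passwd`. The Python sketch below approximates that lookup. It is a simplification of our own (hypothetical `resolve_user` function) and not Docker's actual implementation. It ignores the optional `:group` part and `/etc/group`. It also shows that a purely numeric UID is used as-is, which matches the later K8s `runAsUser` test with a UID that has no passwd entry.

```python
def resolve_user(spec: str, passwd_text: str) -> int:
    """Map a `docker run -u` style value (name or UID) to a numeric UID."""
    user = spec.split(":", 1)[0]  # drop an optional ":group" suffix
    if user.isdigit():
        return int(user)  # numeric UIDs need no passwd entry
    for line in passwd_text.splitlines():
        fields = line.split(":")
        # passwd format: name:password:UID:GID:GECOS:home:shell
        if len(fields) >= 3 and fields[0] == user:
            return int(fields[2])
    raise LookupError(f"unable to find user {user}: no matching entries in passwd file")


if __name__ == "__main__":
    passwd = (
        "root:x:0:0:root:/root:/bin/bash\n"
        "nobody:x:65534:65534:nobody:/nonexistent:/usr/sbin/nologin\n"
        "python:x:1000:1000::/home/python:/bin/sh\n"
    )
    print(resolve_user("python", passwd))
    print(resolve_user("1001", passwd))
    try:
        resolve_user("xyy", passwd)
    except LookupError as err:
        print(err)
```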

Test complete. 😘

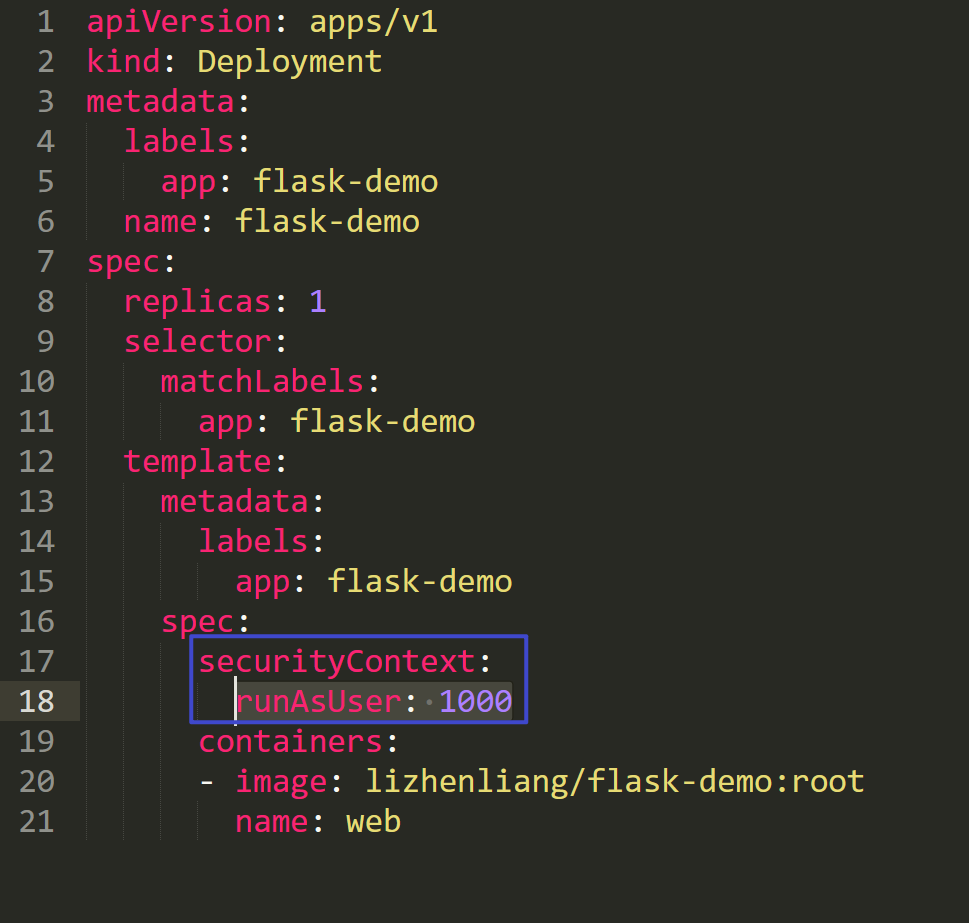

Method 2: Set `spec.securityContext.runAsUser` in K8s to specify the container's default user UID

- Deploy the Pod

```bash
mkdir /root/securityContext-runAsUser
cd /root/securityContext-runAsUser
[root@k8s-master1 securityContext-runAsUser]#kubectl create deployment flask-demo --image=nginx --dry-run=client -oyaml > deployment.yaml
[root@k8s-master1 securityContext-runAsUser]#vim deployment.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: flask-demo
  name: flask-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: flask-demo
  template:
    metadata:
      labels:
        app: flask-demo
    spec:
      securityContext:
        runAsUser: 1000
      containers:
      - image: lizhenliang/flask-demo:root
        name: web
```

Notes:

- `lizhenliang/flask-demo:root`: in this image the program runs as root;
- `lizhenliang/flask-demo:noroot`: in this image the program runs as UID 1000.

Deploy the Deployment above:

```bash
[root@k8s-master1 securityContext-runAsUser]#kubectl apply -f deployment.yaml
deployment.apps/flask-demo created
```

- Check

```bash
[root@k8s-master1 securityContext-runAsUser]#kubectl get po
NAME                          READY   STATUS    RESTARTS   AGE
flask-demo-6c78dcd8dd-j6s67   1/1     Running   0          82s

[root@k8s-master1 securityContext-runAsUser]#kubectl exec -it flask-demo-6c78dcd8dd-j6s67 -- bash
python@flask-demo-6c78dcd8dd-j6s67:/data/www$ id
uid=1000(python) gid=1000(python) groups=1000(python)
python@flask-demo-6c78dcd8dd-j6s67:/data/www$ ps -ef
UID        PID  PPID  C STIME TTY          TIME CMD
python       1     0  0 12:10 ?        00:00:00 /bin/sh -c python main.py
python       7     1  0 12:10 ?        00:00:00 python main.py
python       8     0  0 12:11 pts/0    00:00:00 bash
python      15     8  0 12:11 pts/0    00:00:00 ps -ef
python@flask-demo-6c78dcd8dd-j6s67:/data/www$
```

The container now runs as the user specified by `spec.securityContext.runAsUser` (UID 1000), even though the `:root` image would otherwise run as root. As expected.

- What happens if `spec.securityContext.runAsUser` specifies a UID that does not exist in the image?

```bash
[root@k8s-master1 securityContext-runAsUser]#cp deployment.yaml deployment2.yaml
[root@k8s-master1 securityContext-runAsUser]#vim deployment2.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: flask-demo
  name: flask-demo2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: flask-demo
  template:
    metadata:
      labels:
        app: flask-demo
    spec:
      securityContext:
        runAsUser: 1001
      containers:
      - image: lizhenliang/flask-demo:root
        name: web
```

Deploy:

```bash
[root@k8s-master1 securityContext-runAsUser]#kubectl apply -f deployment2.yaml
deployment.apps/flask-demo2 created
```

Check:

```bash
[root@k8s-master1 securityContext-runAsUser]#kubectl get po -owide
NAME                           READY   STATUS    RESTARTS   AGE     IP               NODE        NOMINATED NODE   READINESS GATES
flask-demo-6c78dcd8dd-j6s67    1/1     Running   0          6m35s   10.244.169.173   k8s-node2   <none>           <none>
flask-demo2-5d978567ff-tcqws   1/1     Running   0          18s     10.244.169.174   k8s-node2   <none>           <none>

[root@k8s-master1 securityContext-runAsUser]#kubectl exec -it flask-demo2-5d978567ff-tcqws -- bash
I have no name!@flask-demo2-5d978567ff-tcqws:/data/www$
I have no name!@flask-demo2-5d978567ff-tcqws:/data/www$ id
uid=1001 gid=0(root) groups=0(root)
I have no name!@flask-demo2-5d978567ff-tcqws:/data/www$ cat /etc/passwd|grep 1001
I have no name!@flask-demo2-5d978567ff-tcqws:/data/www$ tail -4 /etc/passwd
gnats:x:41:41:Gnats Bug-Reporting System (admin):/var/lib/gnats:/usr/sbin/nologin
nobody:x:65534:65534:nobody:/nonexistent:/usr/sbin/nologin
_apt:x:100:65534::/nonexistent:/usr/sbin/nologin
python:x:1000:1000::/home/python:/bin/sh
I have no name!@flask-demo2-5d978567ff-tcqws:/data/www$
```

So if `spec.securityContext.runAsUser` specifies a UID that does not exist, the Pod is still created without error and the process runs with that UID. Because the UID has no entry in `/etc/passwd`, the shell prompt shows `I have no name!` in place of a user name. Note that the `id` output above also reports GID 0 (root).
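The `I have no name!` prompt appears because looking up UID 1001 in the container's user database fails. Python's standard `pwd` module (Unix only) performs the same lookup. The sketch below uses hypothetical helpers `uid_to_name` and `prompt_user` to mimic that behavior. Results depend on the user database of whatever machine runs it.

```python
import pwd
from typing import Optional


def uid_to_name(uid: int) -> Optional[str]:
    """Return the user name for a UID, or None when the passwd database has no entry."""
    try:
        return pwd.getpwuid(uid).pw_name
    except KeyError:  # pwd raises KeyError for unknown UIDs
        return None


def prompt_user(uid: int) -> str:
    """What a bash-style prompt shows for this UID (simplified)."""
    return uid_to_name(uid) or "I have no name!"
```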

- Next, test the `lizhenliang/flask-demo:noroot` image, with `securityContext` commented out:

```bash
[root@k8s-master1 securityContext-runAsUser]#cp deployment2.yaml deployment3.yaml
[root@k8s-master1 securityContext-runAsUser]#vim deployment3.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: flask-demo
  name: flask-demo3
spec:
  replicas: 1
  selector:
    matchLabels:
      app: flask-demo
  template:
    metadata:
      labels:
        app: flask-demo
    spec:
      #securityContext:
      #  runAsUser: 1001
      containers:
      - image: lizhenliang/flask-demo:noroot
        name: web
```

Deploy:

```bash
[root@k8s-master1 securityContext-runAsUser]#kubectl apply -f deployment3.yaml
deployment.apps/flask-demo3 created
```

Test:

```bash
[root@k8s-master1 securityContext-runAsUser]#kubectl get po
NAME                           READY   STATUS    RESTARTS   AGE
flask-demo-6c78dcd8dd-j6s67    1/1     Running   0          14m
flask-demo2-5d978567ff-tcqws   1/1     Running   0          8m4s
flask-demo3-5fd4b7787c-cjjct   1/1     Running   0          84s

[root@k8s-master1 securityContext-runAsUser]#kubectl exec -it flask-demo3-5fd4b7787c-cjjct -- bash
python@flask-demo3-5fd4b7787c-cjjct:/data/www$ id
uid=1000(python) gid=1000(python) groups=1000(python)
python@flask-demo3-5fd4b7787c-cjjct:/data/www$ ps -ef
UID        PID  PPID  C STIME TTY          TIME CMD
python       1     0  0 12:23 ?        00:00:00 /bin/sh -c python main.py
python       7     1  0 12:23 ?        00:00:00 python main.py
python       8     0  0 12:23 pts/0    00:00:00 bash
python      15     8  0 12:24 pts/0    00:00:00 ps -ef
python@flask-demo3-5fd4b7787c-cjjct:/data/www$
```

As expected.

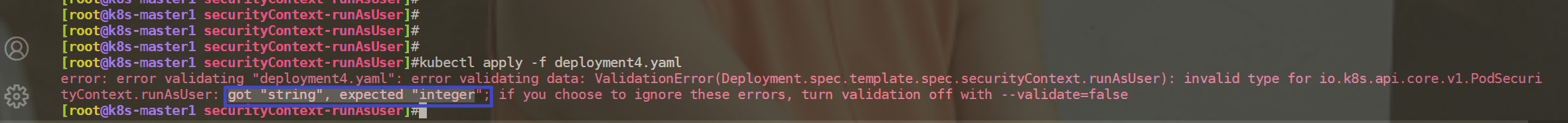

- Another test: what happens if `spec.securityContext.runAsUser` is given a user name instead of a UID?

```bash
[root@k8s-master1 securityContext-runAsUser]#cp deployment3.yaml deployment4.yaml
[root@k8s-master1 securityContext-runAsUser]#vim deployment4.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: flask-demo
  name: flask-demo4
spec:
  replicas: 1
  selector:
    matchLabels:
      app: flask-demo
  template:
    metadata:
      labels:
        app: flask-demo
    spec:
      securityContext:
        runAsUser: "python"
      containers:
      - image: lizhenliang/flask-demo:noroot
        name: web
```

Applying this manifest fails immediately with an error.

Therefore, `spec.securityContext.runAsUser` must be a numeric UID.
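To catch this before `kubectl apply`, you could add a small pre-flight check to whatever tooling generates the manifests. The sketch below is a hypothetical validator of our own and not the API server's code. It encodes the rule observed above (the value must be a numeric UID), plus a non-negative check.

```python
def validate_run_as_user(value) -> int:
    """Return value if it is a usable runAsUser, otherwise raise."""
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(value, bool) or not isinstance(value, int):
        raise TypeError(f"runAsUser must be a numeric UID, got {value!r}")
    if value < 0:
        raise ValueError(f"runAsUser must not be negative, got {value}")
    return value


if __name__ == "__main__":
    for candidate in (1000, 1001, "python"):
        try:
            print(candidate, "->", validate_run_as_user(candidate))
        except (TypeError, ValueError) as err:
            print(candidate, "->", err)
```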

Test complete. 😘

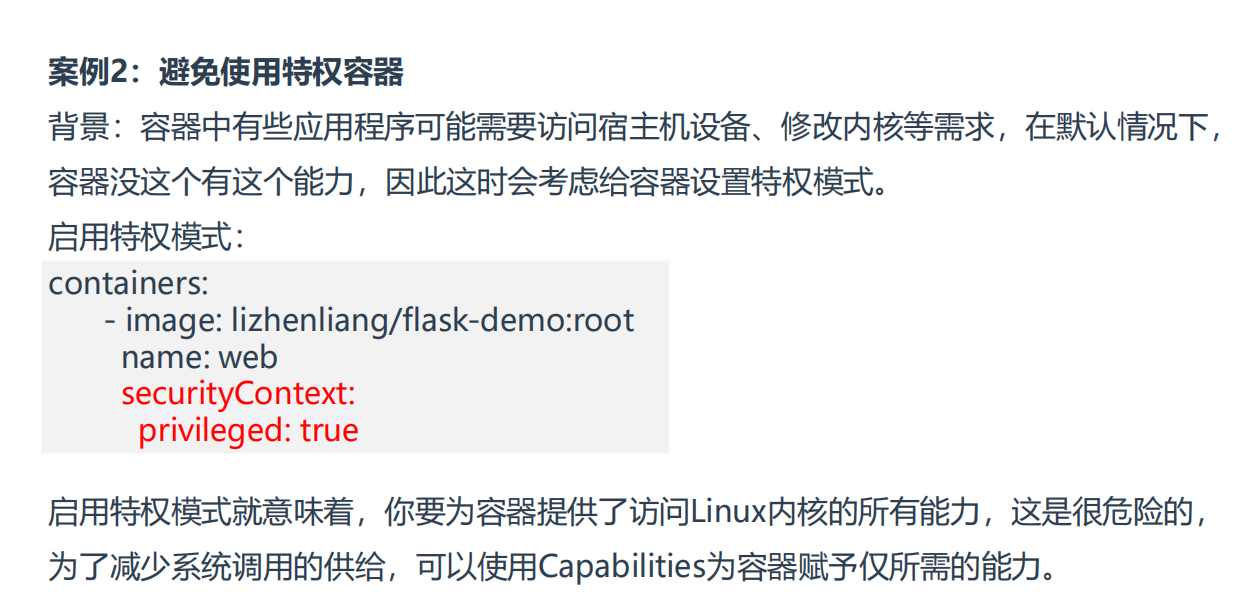

Case 2: Avoid privileged containers

Capabilities split root's privileges into separate units, for example CAP_NET_BIND_SERVICE or CAP_SYS_ADMIN. Each unit gates a group of privileged system calls and operations, so a process can be granted only the ones it needs.

Note: you can use the `capsh` command to list the capabilities of the current shell.

```bash
# On the host (CentOS)
[root@k8s-master1 Capabilities]#capsh --print
Current: = cap_chown,cap_dac_override,cap_dac_read_search,cap_fowner,cap_fsetid,cap_kill,cap_setgid,cap_setuid,cap_setpcap,cap_linux_immutable,cap_net_bind_service,cap_net_broadcast,cap_net_admin,cap_net_raw,cap_ipc_lock,cap_ipc_owner,cap_sys_module,cap_sys_rawio,cap_sys_chroot,cap_sys_ptrace,cap_sys_pacct,cap_sys_admin,cap_sys_boot,cap_sys_nice,cap_sys_resource,cap_sys_time,cap_sys_tty_config,cap_mknod,cap_lease,cap_audit_write,cap_audit_control,cap_setfcap,cap_mac_override,cap_mac_admin,cap_syslog,35,36+ep
Bounding set =cap_chown,cap_dac_override,cap_dac_read_search,cap_fowner,cap_fsetid,cap_kill,cap_setgid,cap_setuid,cap_setpcap,cap_linux_immutable,cap_net_bind_service,cap_net_broadcast,cap_net_admin,cap_net_raw,cap_ipc_lock,cap_ipc_owner,cap_sys_module,cap_sys_rawio,cap_sys_chroot,cap_sys_ptrace,cap_sys_pacct,cap_sys_admin,cap_sys_boot,cap_sys_nice,cap_sys_resource,cap_sys_time,cap_sys_tty_config,cap_mknod,cap_lease,cap_audit_write,cap_audit_control,cap_setfcap,cap_mac_override,cap_mac_admin,cap_syslog,35,36
Securebits: 00/0x0/1'b0
 secure-noroot: no (unlocked)
 secure-no-suid-fixup: no (unlocked)
 secure-keep-caps: no (unlocked)
uid=0(root)
gid=0(root)
groups=0(root)

# Now start a centos container and check its capabilities
[root@k8s-master1 Capabilities]#docker run -d centos sleep 24h
Unable to find image 'centos:latest' locally
latest: Pulling from library/centos
a1d0c7532777: Pull complete
Digest: sha256:a27fd8080b517143cbbbab9dfb7c8571c40d67d534bbdee55bd6c473f432b177
Status: Downloaded newer image for centos:latest
e69eddf42c4cc9c44d943786a2978cd55e3bdf68b9de23f6e221a2e44d8f63b0
[root@k8s-master1 Capabilities]#docker exec -it e69eddf42c4cc9c44d943786a2978cd55e3bdf68b9de23f6e221a2e44d8f63b0 bash
[root@e69eddf42c4c /]# capsh --print
Current: = cap_chown,cap_dac_override,cap_fowner,cap_fsetid,cap_kill,cap_setgid,cap_setuid,cap_setpcap,cap_net_bind_service,cap_net_raw,cap_sys_chroot,cap_mknod,cap_audit_write,cap_setfcap+eip
Bounding set =cap_chown,cap_dac_override,cap_fowner,cap_fsetid,cap_kill,cap_setgid,cap_setuid,cap_setpcap,cap_net_bind_service,cap_net_raw,cap_sys_chroot,cap_mknod,cap_audit_write,cap_setfcap
Ambient set =
Securebits: 00/0x0/1'b0
 secure-noroot: no (unlocked)
 secure-no-suid-fixup: no (unlocked)
 secure-keep-caps: no (unlocked)
 secure-no-ambient-raise: no (unlocked)
uid=0(root)
gid=0(root)
groups=
[root@e69eddf42c4c /]#

# The centos container has far fewer capabilities than the host.
```
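The lists printed by `capsh` correspond to bitmasks that the kernel also exposes in the `CapEff`/`CapBnd` lines of `/proc/<pid>/status`. The sketch below decodes such a mask using the capability numbers from `linux/capability.h`. For example, `0xa80425fb` decodes to exactly the 14 capabilities shown for the centos container above. Bits beyond the table are printed as plain numbers, just like the `35,36` printed by the older capsh on the CentOS 7 host. The helper names are our own.

```python
from typing import List

# Index = capability number as defined in linux/capability.h (0..40).
CAP_NAMES = [
    "chown", "dac_override", "dac_read_search", "fowner", "fsetid", "kill",
    "setgid", "setuid", "setpcap", "linux_immutable", "net_bind_service",
    "net_broadcast", "net_admin", "net_raw", "ipc_lock", "ipc_owner",
    "sys_module", "sys_rawio", "sys_chroot", "sys_ptrace", "sys_pacct",
    "sys_admin", "sys_boot", "sys_nice", "sys_resource", "sys_time",
    "sys_tty_config", "mknod", "lease", "audit_write", "audit_control",
    "setfcap", "mac_override", "mac_admin", "syslog", "wake_alarm",
    "block_suspend", "audit_read", "perfmon", "bpf", "checkpoint_restore",
]


def decode_caps(mask: int) -> List[str]:
    """Translate a capability bitmask into capsh-style names."""
    names = []
    for bit in range(mask.bit_length()):
        if (mask >> bit) & 1:
            names.append("cap_" + CAP_NAMES[bit] if bit < len(CAP_NAMES) else str(bit))
    return names


def current_effective_caps(status_path: str = "/proc/self/status") -> List[str]:
    """Read CapEff for the current process (Linux only) and decode it."""
    with open(status_path) as f:
        for line in f:
            if line.startswith("CapEff:"):
                return decode_caps(int(line.split()[1], 16))
    return []
```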

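==💘 Case: Avoid privileged containers and use capabilities instead - 2023.5.30 (tested successfully)==

- Lab environment

```
Lab environment:
1. Win10, VMware Workstation VMs;
2. K8s cluster: 3 CentOS 7.6 (1810) VMs, 1 master node and 2 worker nodes
   k8s version: v1.20.0
   docker://20.10.7
```

- Lab software

None.

- The docker CLI also has a privileged flag:

```bash
[root@k8s-master1 ~]#docker run --help|grep privi
      --privileged
```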

- As mentioned earlier, even when a container process runs as root, that root user has far fewer capabilities than root on the host. The container engine restricts them for security reasons.

For example, even as root inside a container, you cannot use the `mount` command:

```bash
[root@k8s-master1 ~]#kubectl get po
NAME      READY   STATUS    RESTARTS   AGE
busybox   1/1     Running   13         8d

[root@k8s-master1 ~]#kubectl exec -it busybox -- sh
/ # mount -t tmpfs /tmp /tmp
mount: permission denied (are you root?)
/ # id
uid=0(root) gid=0(root) groups=10(wheel)
/ #

# Root on the host, of course, can use mount normally:
[root@k8s-master1 ~]#ls /tmp/
2023.5.23-code  2023.5.23-code.tar.gz  vmware-root_5805-1681267545
[root@k8s-master1 ~]#mount -t tmpfs /tmp /tmp
[root@k8s-master1 ~]#df -hT|grep tmp
/tmp           tmpfs     910M     0  910M   0% /tmp
```

- Enable privileged mode and test

```bash
[root@k8s-master1 ~]#mkdir Capabilities
[root@k8s-master1 ~]#cd Capabilities/
[root@k8s-master1 Capabilities]#vim pod1.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-sc1
spec:
  containers:
  - image: busybox
    name: web
    command:
    - sleep
    - 24h
    securityContext:
      privileged: true
```

```bash
# Deploy:
[root@k8s-master1 Capabilities]#kubectl apply -f pod1.yaml
pod/pod-sc1 created

# Test:
[root@k8s-master1 Capabilities]#kubectl get po
NAME      READY   STATUS    RESTARTS   AGE
pod-sc1   1/1     Running   0          61s
[root@k8s-master1 Capabilities]#kubectl exec -it pod-sc1 -- sh
/ #
/ # mount -t tmpfs /tmp /tmp
/ # id
uid=0(root) gid=0(root) groups=10(wheel)
/ # df -hT|grep tmp
tmpfs                tmpfs          64.0M         0     64.0M   0% /dev
tmpfs                tmpfs         909.8M         0    909.8M   0% /sys/fs/cgroup
shm                  tmpfs          64.0M         0     64.0M   0% /dev/shm
tmpfs                tmpfs         909.8M     12.0K    909.8M   0% /var/run/secrets/kubernetes.io/serviceaccount
/tmp                 tmpfs         909.8M         0    909.8M   0% /tmp
/ # ls /tmp/
/ #
```

With privileged mode enabled, the container can use `mount` to mount filesystems.

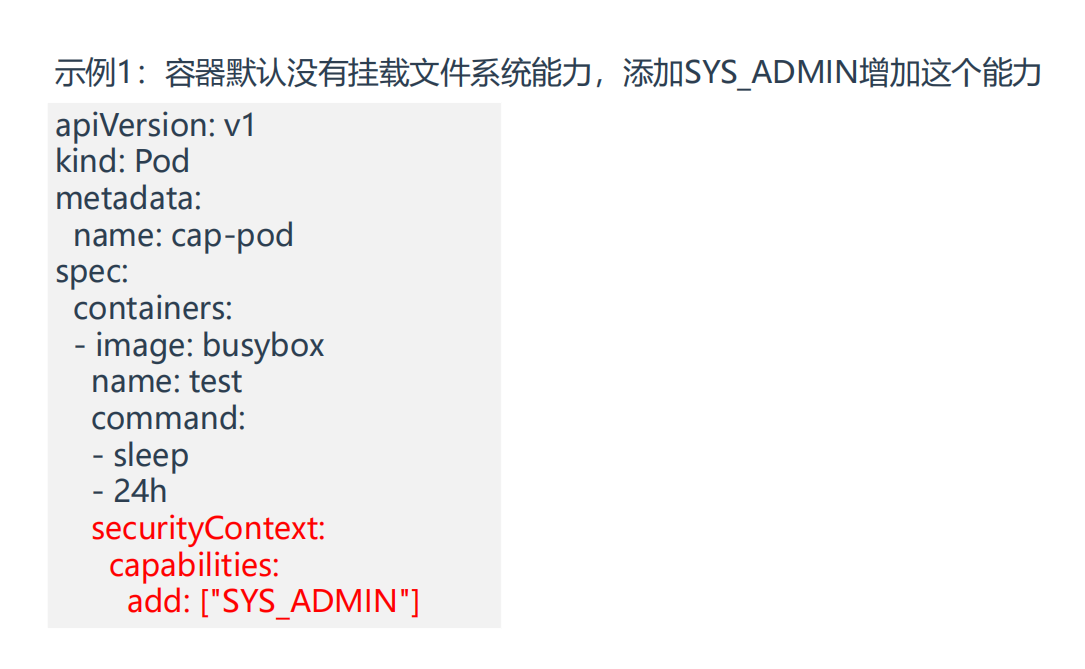

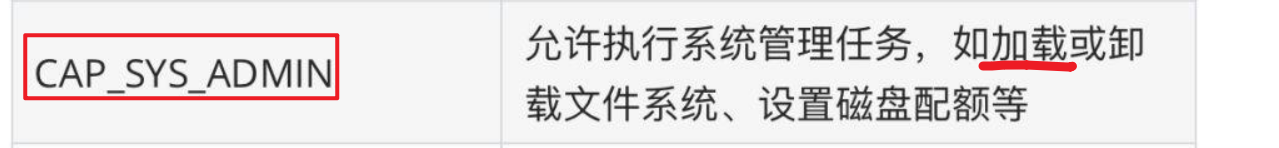

- Now let's avoid the privileged container and solve the same problem with the `SYS_ADMIN` capability instead.

The SYS_ADMIN capability covers a broad set of system administration operations, including mount(2) and umount(2).

Deploy the Pod:

```bash
[root@k8s-master1 Capabilities]#cp pod1.yaml pod2.yaml
[root@k8s-master1 Capabilities]#vim pod2.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-sc2
spec:
  containers:
  - image: busybox
    name: web
    command:
    - sleep
    - 24h
    securityContext:
      capabilities:
        add: ["SYS_ADMIN"]
        # Note: in this Docker-based environment both "SYS_ADMIN" and "CAP_SYS_ADMIN" worked,
        # but the Kubernetes documentation says to omit the CAP_ prefix.
```

```bash
# Deploy:
[root@k8s-master1 Capabilities]#kubectl apply -f pod2.yaml
pod/pod-sc2 created

# Test:
[root@k8s-master1 Capabilities]#kubectl get po
NAME      READY   STATUS    RESTARTS   AGE
pod-sc1   1/1     Running   0          9m9s
pod-sc2   1/1     Running   0          36s
[root@k8s-master1 Capabilities]#kubectl exec -it pod-sc2 -- sh
/ #
/ # mount -t tmpfs /tmp /tmp
/ # id
uid=0(root) gid=0(root) groups=10(wheel)
/ # df -hT|grep tmp
/tmp                 tmpfs         909.8M         0    909.8M   0% /tmp
/ #
```

With the SYS_ADMIN capability added, the container can also mount filesystems without being fully privileged. As expected. 😘
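The difference between `privileged: true` and `capabilities.add` can be pictured as set arithmetic on the runtime's default capability set. The sketch below is a simplified model of our own, not the runtime's code:

- `DEFAULT_CAPS` is taken from the centos container's `capsh` output earlier in this section.
- `normalize` accepts both the `SYS_ADMIN` and `CAP_SYS_ADMIN` spellings.
- `drop: ["ALL"]` empties the set before `add` is applied.

The model ignores `add: ["ALL"]` and the extra device access that `privileged: true` also grants.

```python
from typing import Iterable, Set

# Default set seen in the container's capsh output above (names without CAP_).
DEFAULT_CAPS = frozenset({
    "CHOWN", "DAC_OVERRIDE", "FOWNER", "FSETID", "KILL", "SETGID", "SETUID",
    "SETPCAP", "NET_BIND_SERVICE", "NET_RAW", "SYS_CHROOT", "MKNOD",
    "AUDIT_WRITE", "SETFCAP",
})


def normalize(cap: str) -> str:
    """Accept "SYS_ADMIN", "sys_admin" and "CAP_SYS_ADMIN" alike."""
    cap = cap.upper()
    return cap[len("CAP_"):] if cap.startswith("CAP_") else cap


def effective_caps(add: Iterable[str] = (), drop: Iterable[str] = ()) -> Set[str]:
    """Apply securityContext.capabilities drop/add to the default set."""
    drop_set = {normalize(c) for c in drop}
    caps = set() if "ALL" in drop_set else set(DEFAULT_CAPS) - drop_set
    caps |= {normalize(c) for c in add}
    return caps
```

The last combination in the test cases, dropping `ALL` and adding back only what the application needs, is a common hardening pattern.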

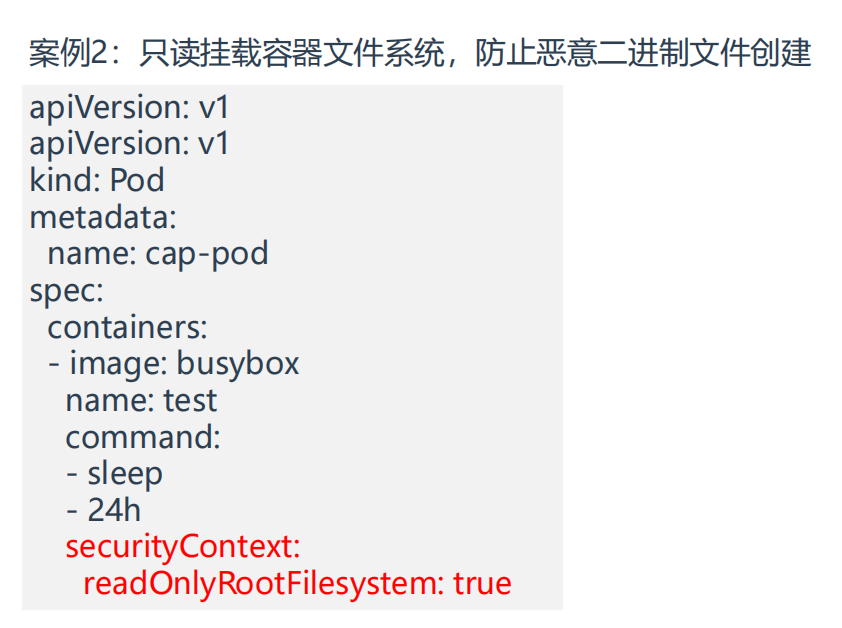

Case 3: Mount the container's filesystem read-only

==💘 Case: Mount the container's root filesystem read-only - 2023.5.30 (tested successfully)==

- Lab environment

```
Lab environment:
1. Win10, VMware Workstation VMs;
2. K8s cluster: 3 CentOS 7.6 (1810) VMs, 1 master node and 2 worker nodes
   k8s version: v1.20.0
   docker://20.10.7
```

- Lab software

None.

- In a Pod created with default settings, you can freely create files inside the container:

```bash
[root@k8s-master1 Capabilities]#kubectl exec -it pod-sc2 -- sh
/ #
/ # touch 1
/ # ls
1      bin    dev    etc    home   lib    lib64  proc   root   sys    tmp    usr    var
/ #
```

- For security, however, we may not want the program to modify any file in the container. How can we enforce that?

This is what a read-only root filesystem is for. Let's test it:

```bash
[root@k8s-master1 Capabilities]#cp pod2.yaml pod3.yaml
[root@k8s-master1 Capabilities]#vim pod3.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-sc3
spec:
  containers:
  - image: busybox
    name: web
    command:
    - sleep
    - 24h
    securityContext:
      readOnlyRootFilesystem: true
```

```bash
# Deploy:
[root@k8s-master1 Capabilities]#kubectl apply -f pod3.yaml
pod/pod-sc3 created

# Test:
[root@k8s-master1 Capabilities]#kubectl get po |grep pod-sc
pod-sc1   1/1     Running   0          17m
pod-sc2   1/1     Running   0          9m5s
pod-sc3   1/1     Running   0          52s
[root@k8s-master1 Capabilities]#kubectl exec -it pod-sc3 -- sh
/ #
/ # ls
bin    dev    etc    home   lib    lib64  proc   root   sys    tmp    usr    var
/ # touch a
touch: a: Read-only file system
/ # mkdir a
mkdir: can't create directory 'a': Read-only file system
/ #
```

With a read-only root filesystem configured for the Pod, nothing can be created or modified on the container's root filesystem. The requirement is met. Test complete. 😘
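Applications that run with `readOnlyRootFilesystem: true` sometimes need to detect whether a directory is writable, for example to decide where to put temporary files. A common approach is to mount an `emptyDir` volume at such a path. The sketch below is a hypothetical helper that simply tries to create a file. On a read-only filesystem the attempt fails with `EROFS`, the error behind the `Read-only file system` messages above.

```python
import errno
import os
import tempfile


def is_writable(directory: str) -> bool:
    """Try to create and remove a temp file; return False if writes are refused."""
    try:
        fd, path = tempfile.mkstemp(dir=directory)
    except OSError as exc:
        # EROFS: read-only filesystem; EACCES/EPERM: permission problems.
        if exc.errno in (errno.EROFS, errno.EACCES, errno.EPERM):
            return False
        raise  # e.g. the directory does not exist
    os.close(fd)
    os.remove(path)
    return True
```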

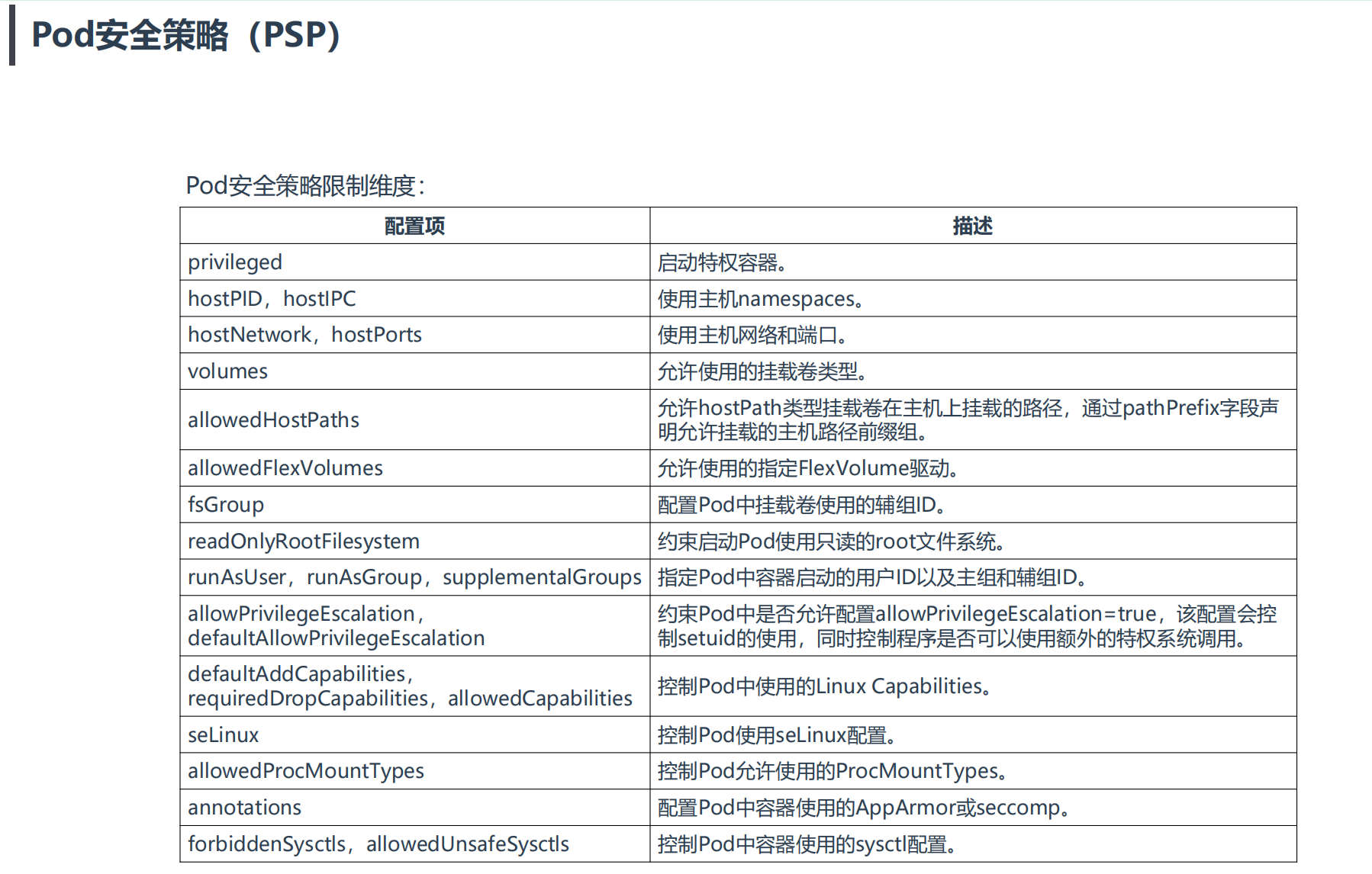

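2. Pod Security Policy (PSP)

**PodSecurityPolicy (PSP):** an important security check applied when Pods are deployed in Kubernetes. It constrains how applications may behave at runtime. (PSP was deprecated in v1.21 and removed in v1.25.)

A PSP object defines a set of conditions a Pod must meet at runtime, plus default values for related fields. K8s accepts a Pod only if it satisfies these conditions.

Suppose a user creates a Pod with an SA (ServiceAccount). K8s first checks whether the requesting user or the Pod's ServiceAccount is authorized to use a PSP. If so, it then checks whether the Pod's configuration satisfies that PSP's rules. If either step fails, the Pod is rejected. Putting PSP into practice therefore requires the following:

• Create a ServiceAccount (SA).

• Give the SA permission to create the relevant resources, such as Pods and Deployments.

• Allow the SA to use the PSP: create a Role that grants `use` on the PSP, then bind the SA to that Role.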
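The two-stage check described above can be modeled in a few lines. In this simplified sketch, all names are hypothetical. A real PSP has many more fields, and the admission plugin also mutates Pods. Here `can_use` stands in for the RBAC question "may this subject `use` this PSP?":

- A policy is usable if either the requesting user or the Pod's own ServiceAccount may use it.
- The Pod is admitted only if some usable policy accepts it.

The last test case shows a Pod created by a ReplicaSet controller being judged by the Pod's ServiceAccount, which comes up in the lab below.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Policy:
    name: str
    allow_privileged: bool = False


@dataclass
class PodRequest:
    requester: str        # user that sends the create request
    service_account: str  # ServiceAccount the Pod will run as
    privileged: List[bool] = field(default_factory=list)  # one flag per container


def admit(req: PodRequest, policies: List[Policy],
          can_use: Callable[[str, str], bool]) -> Tuple[bool, str]:
    # Step 1: authorization - which policies may this request use at all?
    usable = [p for p in policies
              if can_use(req.requester, p.name) or can_use(req.service_account, p.name)]
    if not usable:
        return False, "unable to admit pod: []"
    # Step 2: validation - does any usable policy accept the Pod spec?
    for p in usable:
        if p.allow_privileged or not any(req.privileged):
            return True, f"admitted by {p.name}"
    return False, "Privileged containers are not allowed"
```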

==💘 Lab: Pod Security Policy - 2023.5.31 (tested successfully)==

- Lab environment

```
Lab environment:
1. Win10, VMware Workstation VMs;
2. K8s cluster: 3 CentOS 7.6 (1810) VMs, 1 master node and 2 worker nodes
   k8s version: v1.20.0
   docker://20.10.7
```

- Lab software

```
Link: https://pan.baidu.com/s/14gKA3TVzy9wQLk2aCCKw_g?pwd=0820
Extraction code: 0820
2023.5.31-psp-code
```

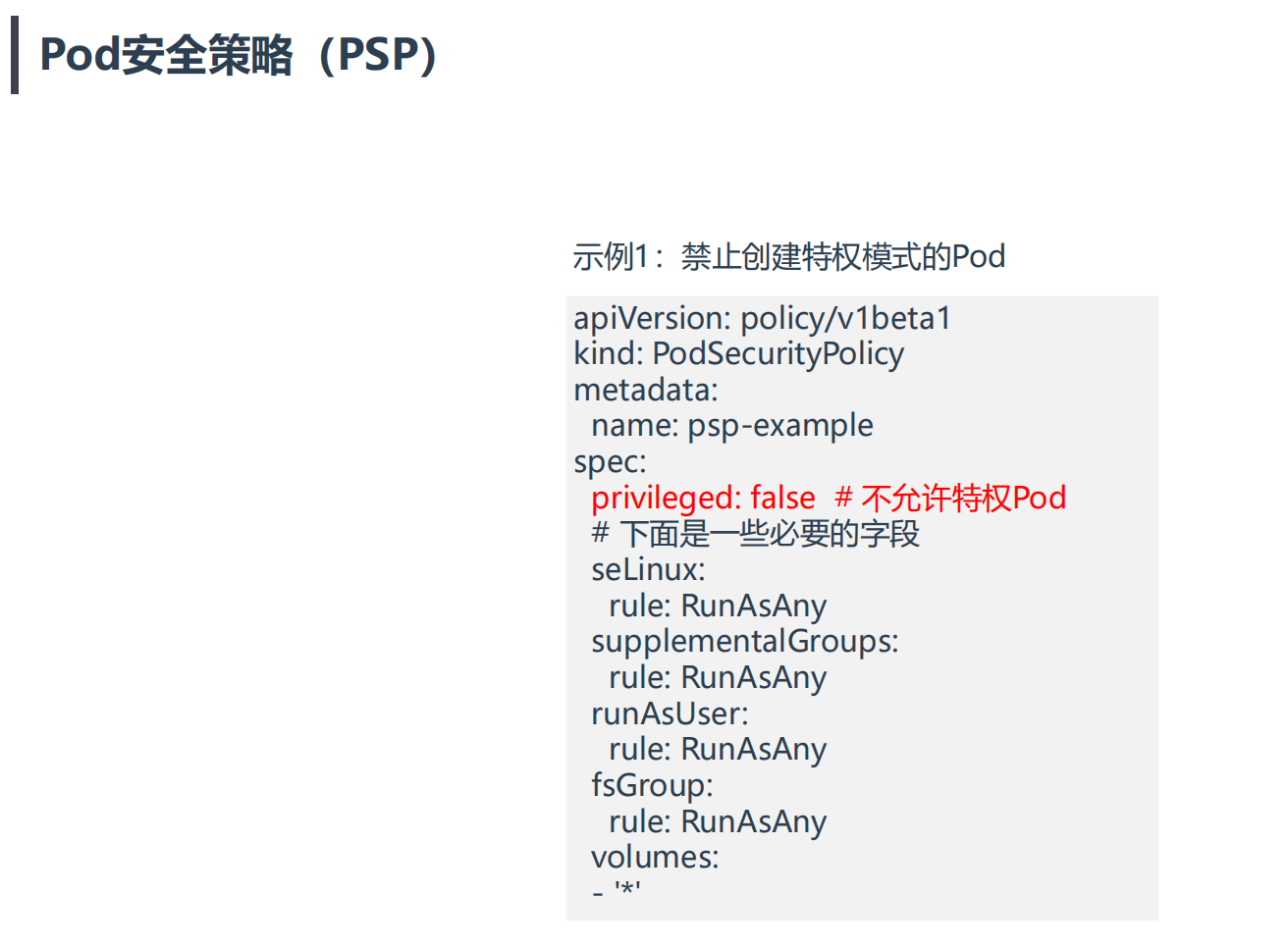

Case 1: Forbid creating Pods in privileged mode

```bash
# Example 1: forbid creating Pods in privileged mode
# Create the SA
kubectl create serviceaccount aliang6
# Bind the SA to the built-in edit ClusterRole
kubectl create rolebinding aliang6 --clusterrole=edit --serviceaccount=default:aliang6
# Create a Role that allows using the PSP
kubectl create role psp:unprivileged --verb=use --resource=podsecuritypolicy --resource-name=psp-example
# Bind the SA to that Role
kubectl create rolebinding aliang:psp:unprivileged --role=psp:unprivileged --serviceaccount=default:aliang6
```

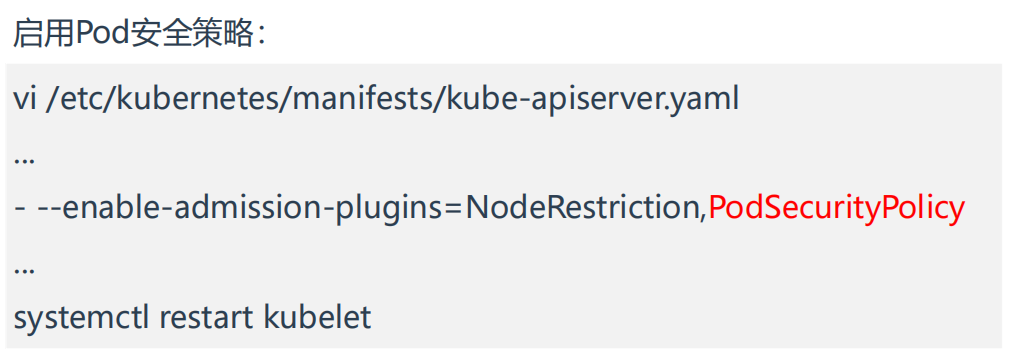

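1. Enable Pod Security Policy in the K8s cluster

Pod Security Policy is implemented as an admission controller, which is disabled by default. Once enabled, it enforces the policies, and any Pod that does not satisfy them cannot be created. It is therefore recommended to add policies and authorize their use before enabling PSP.

To enable it, add `PodSecurityPolicy` to the kube-apiserver's `--enable-admission-plugins` flag:

```bash
[root@k8s-master1 ~]#vim /etc/kubernetes/manifests/kube-apiserver.yaml
# Change
- --enable-admission-plugins=NodeRestriction
# to
- --enable-admission-plugins=NodeRestriction,PodSecurityPolicy
```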

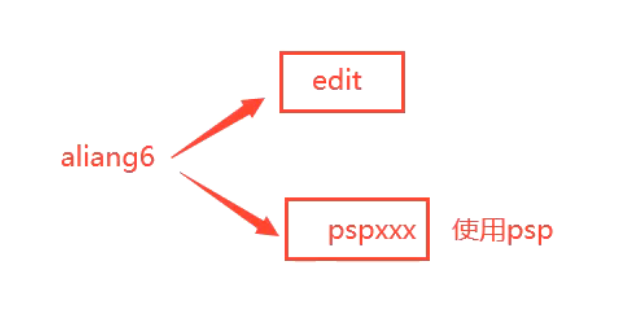

2. Create the SA and bind it to a built-in ClusterRole

```bash
[root@k8s-master1 ~]#mkdir psp
[root@k8s-master1 ~]#cd psp/
[root@k8s-master1 psp]#kubectl create serviceaccount aliang6
serviceaccount/aliang6 created

[root@k8s-master1 psp]#kubectl create rolebinding aliang6 --clusterrole=edit --serviceaccount=default:aliang6
rolebinding.rbac.authorization.k8s.io/aliang6 created

# Note: the edit ClusterRole grants broad permissions, but it cannot view or modify Roles and RoleBindings.
[root@k8s-master1 psp]#kubectl get clusterrole|grep -v system:
NAME                      CREATED AT
admin                     2022-10-22T02:34:47Z
calico-kube-controllers   2022-10-22T02:41:12Z
calico-node               2022-10-22T02:41:12Z
cluster-admin             2022-10-22T02:34:47Z
edit                      2022-10-22T02:34:47Z
ingress-nginx             2022-11-29T11:28:49Z
kubeadm:get-nodes         2022-10-22T02:34:48Z
kubernetes-dashboard      2022-10-22T02:42:46Z
view                      2022-10-22T02:34:47Z
```

3. Configure the PSP

```bash
[root@k8s-master1 psp]#vim psp.yaml
```

```yaml
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp-example
spec:
  privileged: false  # disallow privileged Pods
  # The following fields are required
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  runAsUser:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  volumes:
  - '*'
```

```bash
# Deploy:
[root@k8s-master1 psp]#kubectl apply -f psp.yaml
podsecuritypolicy.policy/psp-example created
```

4. Create resources to test

First, test by creating a Deployment:

```bash
[root@k8s-master1 psp]#kubectl --as=system:serviceaccount:default:aliang6 create deployment web --image=nginx
deployment.apps/web created
[root@k8s-master1 psp]#kubectl get deploy
NAME   READY   UP-TO-DATE   AVAILABLE   AGE
web    0/1     0            0           6s
[root@k8s-master1 psp]#kubectl get pod|grep web
# no output
```

The Deployment object is created, but its Pod is not.

Then test by creating a Pod directly:

```bash
[root@k8s-master1 psp]#kubectl --as=system:serviceaccount:default:aliang6 run web --image=nginx
Error from server (Forbidden): pods "web" is forbidden: PodSecurityPolicy: unable to admit pod: []
```

Neither the Deployment's Pod nor the standalone Pod can be created. The reason is that the aliang6 SA has not yet been granted permission to use the PSP.

5. Create a Role that can use the PSP, and bind the SA to it

```bash
# Create a Role that allows using the PSP
[root@k8s-master1 psp]#kubectl create role psp:unprivileged --verb=use --resource=podsecuritypolicy --resource-name=psp-example
role.rbac.authorization.k8s.io/psp:unprivileged created
# Bind the SA to the Role
[root@k8s-master1 psp]#kubectl create rolebinding aliang6:psp:unprivileged --role=psp:unprivileged --serviceaccount=default:aliang6
rolebinding.rbac.authorization.k8s.io/aliang6:psp:unprivileged created
```
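`kubectl create role` is a shortcut for writing a Role object. The sketch below builds what should be an equivalent Role as a Python dict and prints it as JSON, which `kubectl apply -f -` also accepts. The PSP resource lives in the `policy` API group under the plural name `podsecuritypolicies`. The helper name and the idea of generating manifests from Python are our own.

```python
import json


def psp_use_role(role_name: str, psp_name: str, namespace: str = "default") -> dict:
    """Build a Role granting the `use` verb on one PodSecurityPolicy."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": role_name, "namespace": namespace},
        "rules": [{
            "apiGroups": ["policy"],
            "resources": ["podsecuritypolicies"],
            "resourceNames": [psp_name],
            "verbs": ["use"],
        }],
    }


if __name__ == "__main__":
    # e.g. python3 role.py | kubectl apply -f -
    print(json.dumps(psp_use_role("psp:unprivileged", "psp-example"), indent=2))
```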

6. Test again

Test with a privileged Pod:

```bash
[root@k8s-master1 psp]#kubectl run pod-psp --image=busybox --dry-run=client -oyaml > pod.yaml
[root@k8s-master1 psp]#vim pod.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-psp
spec:
  containers:
  - image: busybox
    name: pod-psp
    command:
    - sleep
    - 24h
    securityContext:
      privileged: true
```

```bash
# Deploy
[root@k8s-master1 psp]#kubectl --as=system:serviceaccount:default:aliang6 apply -f pod.yaml
Error from server (Forbidden): error when creating "pod.yaml": pods "pod-psp" is forbidden: PodSecurityPolicy: unable to admit pod: [spec.containers[0].securityContext.privileged: Invalid value: true: Privileged containers are not allowed]
```

The PSP takes effect and rejects the Pod.

Now remove the privileged setting from the Pod, create it again and observe the result:

```bash
[root@k8s-master1 psp]#vim pod.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-psp
spec:
  containers:
  - image: busybox
    name: pod-psp
    command:
    - sleep
    - 24h
```

```bash
# Deploy
[root@k8s-master1 psp]#kubectl --as=system:serviceaccount:default:aliang6 apply -f pod.yaml
pod/pod-psp created
[root@k8s-master1 psp]#kubectl get po|grep pod-psp
pod-psp   1/1     Running   0          19s
```

The Pod without privileged mode is created successfully.

此时,利用再创建下pod,deployment看下现象。

1#创建pod

2[root@k8s-master1 psp]#kubectl --as=system:serviceaccount:default:aliang6 run web10 --image=nginx

3pod/web10 created

4[root@k8s-master1 psp]#kubectl get po|grep web10

5web10 1/1 Running 0 13s

6

7#创建deployment

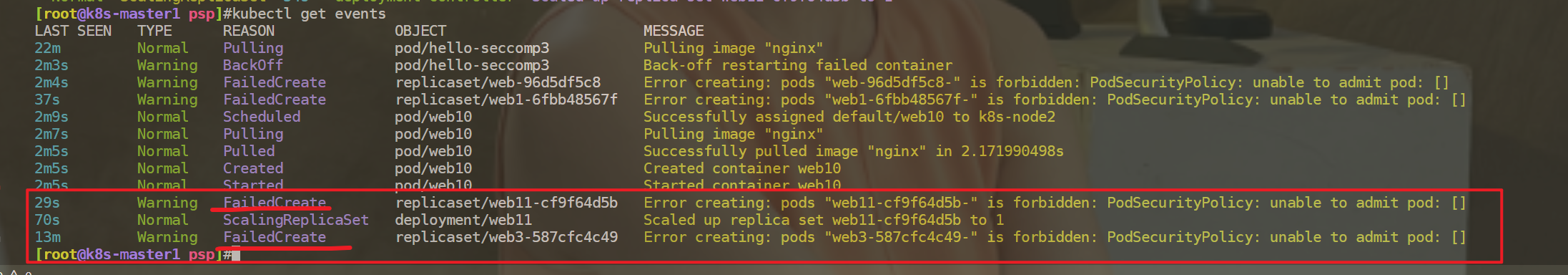

8[root@k8s-master1 psp]#kubectl --as=system:serviceaccount:default:aliang6 create deployment web11 --image=nginx

9deployment.apps/web11 created

10[root@k8s-master1 psp]#kubectl get deployment |grep web11

11web11 0/1 0 0 38s

12[root@k8s-master1 psp]#kubectl get events

通过查看events日志,可以发现创建deployment报错的原因。

Repliaset是没这个权限,replicaset使用的是默认这个sa账号的权限。

1[root@k8s-master1 psp]#kubectl get sa

2NAME SECRETS AGE

3aliang6 1 5h15m

4default 1 221d

现在要做的就是让这个默认sa具有访问psp权限就可以了。

有2种方法:

创建deploy时指定默认的sa为aliang账号;

用命令给默认sa赋予访问psp权限;

我们来使用第二种方法:

1[root@k8s-master1 psp]#kubectl create rolebinding default:psp:unprivileged --role=psp:unprivileged --serviceaccount=default:default

2rolebinding.rbac.authorization.k8s.io/default:psp:unprivileged created

配置完成后,查看:

1[root@k8s-master1 psp]#kubectl --as=system:serviceaccount:default:aliang6 create deployment web12 --image=nginx

2deployment.apps/web12 created

3[root@k8s-master1 psp]#kubectl get deployment|grep web12

4web12 1/1 1 1 11s

5[root@k8s-master1 psp]#kubectl get po|grep web12

6web12-7b88cfd55f-9z5hz 1/1 Running 0 20s

此时,就可以正常部署deployment了。

测试结束。😘

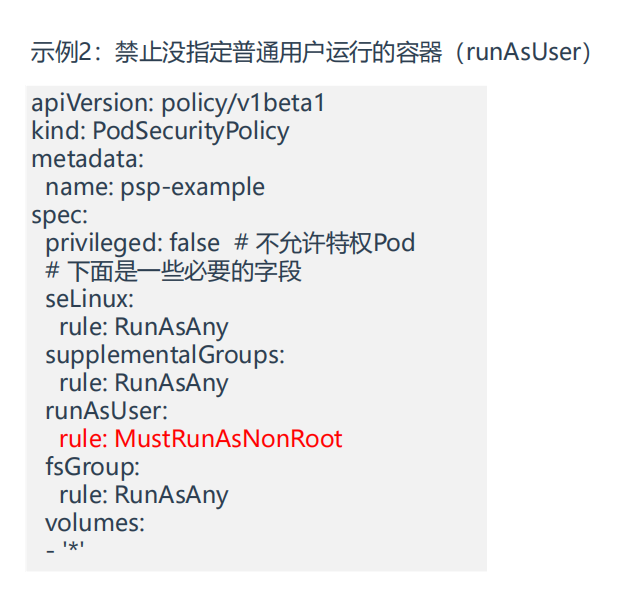

Example 2: Forbid containers that do not run as a non-root user

- Modify the PSP above:

```bash
[root@k8s-master1 psp]#vim psp.yaml
```

```yaml
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp-example
spec:
  privileged: false  # disallow privileged Pods
  # The following fields are required
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  runAsUser:
    rule: MustRunAsNonRoot
  fsGroup:
    rule: RunAsAny
  volumes:
  - '*'
```

```bash
# Deploy
[root@k8s-master1 psp]#kubectl apply -f psp.yaml
podsecuritypolicy.policy/psp-example configured
```

- Deploy a test Pod

```bash
[root@k8s-master1 psp]#cp pod.yaml pod2.yaml
[root@k8s-master1 psp]#vim pod2.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-psp2
spec:
  containers:
  - image: lizhenliang/flask-demo:root
    name: web
    securityContext:
      runAsUser: 1000
```

```bash
# Deploy
[root@k8s-master1 psp]#kubectl apply -f pod2.yaml
pod/pod-psp2 created

# Verify
[root@k8s-master1 psp]#kubectl get po|grep pod-psp2
pod-psp2   1/1     Running   0          40s
[root@k8s-master1 psp]#kubectl exec -it pod-psp2 -- bash
python@pod-psp2:/data/www$ id
uid=1000(python) gid=1000(python) groups=1000(python)
python@pod-psp2:/data/www$

# Now create another Pod from the command line as aliang6, without specifying a user
[root@k8s-master1 psp]#kubectl --as=system:serviceaccount:default:aliang6 run web22 --image=nginx
pod/web22 created
[root@k8s-master1 psp]#kubectl get po|grep web22
web22   0/1     CreateContainerConfigError   0          15s
[root@k8s-master1 psp]#kubectl describe po web22
Events:
  Type     Reason     Age                From               Message
  ----     ------     ----               ----               -------
  Normal   Scheduled  32s                default-scheduler  Successfully assigned default/web22 to k8s-node2
  Normal   Pulled     28s                kubelet            Successfully pulled image "nginx" in 1.756553796s
  Normal   Pulled     25s                kubelet            Successfully pulled image "nginx" in 2.394107372s
  Warning  Failed     11s (x3 over 28s)  kubelet            Error: container has runAsNonRoot and image will run as root (pod: "web22_default(0db376b0-0da9-4119-97a1-091ed8798159)", container: web22)
# The policy takes effect, as expected.
```
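Note that web22 was admitted by the PSP but then failed in the kubelet. As we understand the PSP `MustRunAsNonRoot` rule, a Pod that sets neither `runAsUser` nor `runAsNonRoot` is mutated to `runAsNonRoot: true`. The kubelet then checks the image's user before starting the container. The simplified model below is our own sketch of that check. The "image will run as root" message is the one shown in the events above; the other two messages are paraphrased. The model also suggests why `pod-psp2` sets `runAsUser: 1000` explicitly: an image whose `USER` is a name such as `python` cannot be verified as non-root by UID.

```python
from typing import Optional


def verify_run_as_non_root(run_as_user: Optional[int], image_user: str) -> None:
    """Raise PermissionError if a runAsNonRoot container would (or might) run as root.

    run_as_user: securityContext.runAsUser, or None if unset
    image_user:  the image's USER value ("" when the Dockerfile has no USER)
    """
    if run_as_user is not None:
        if run_as_user == 0:
            raise PermissionError("runAsUser 0 breaks the non-root policy")
        return  # an explicit non-zero UID takes precedence over the image's USER
    user = image_user.split(":", 1)[0]
    if user in ("", "0"):
        raise PermissionError("container has runAsNonRoot and image will run as root")
    if not user.isdigit():
        raise PermissionError(
            f"image has non-numeric user ({user}); cannot verify it is non-root"
        )
```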

Test complete. 😘

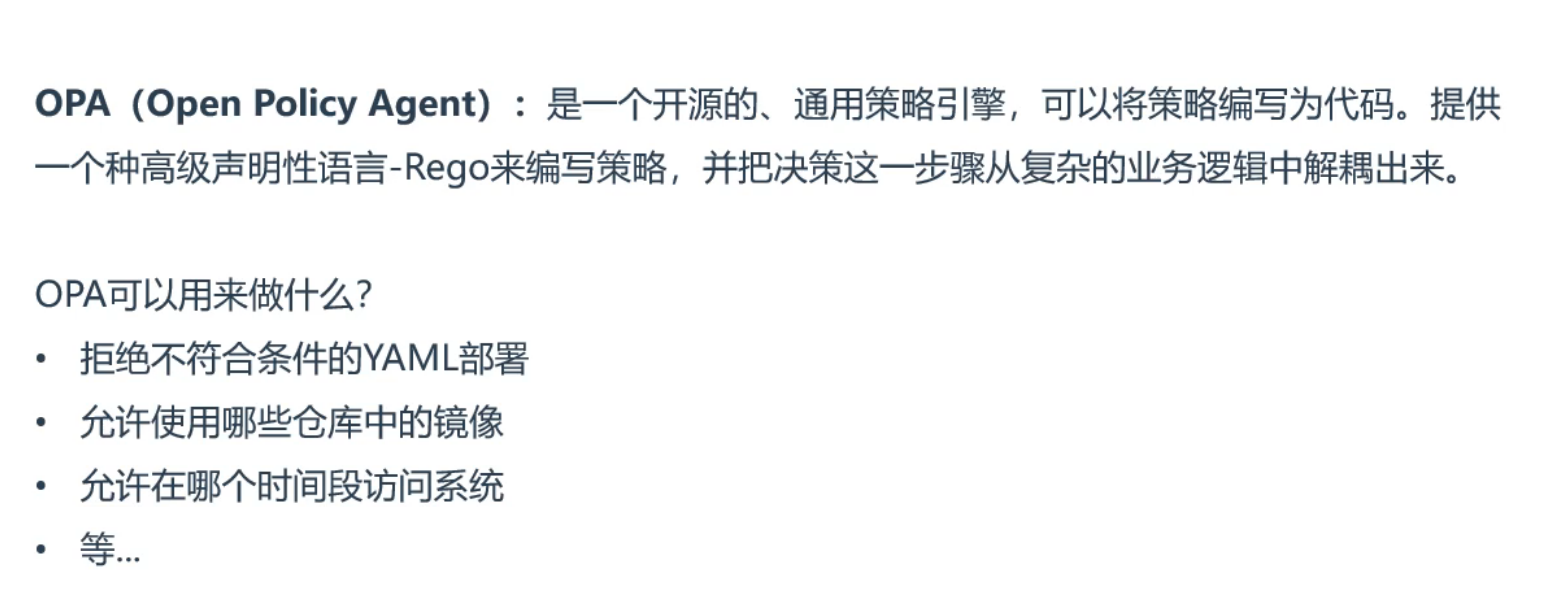

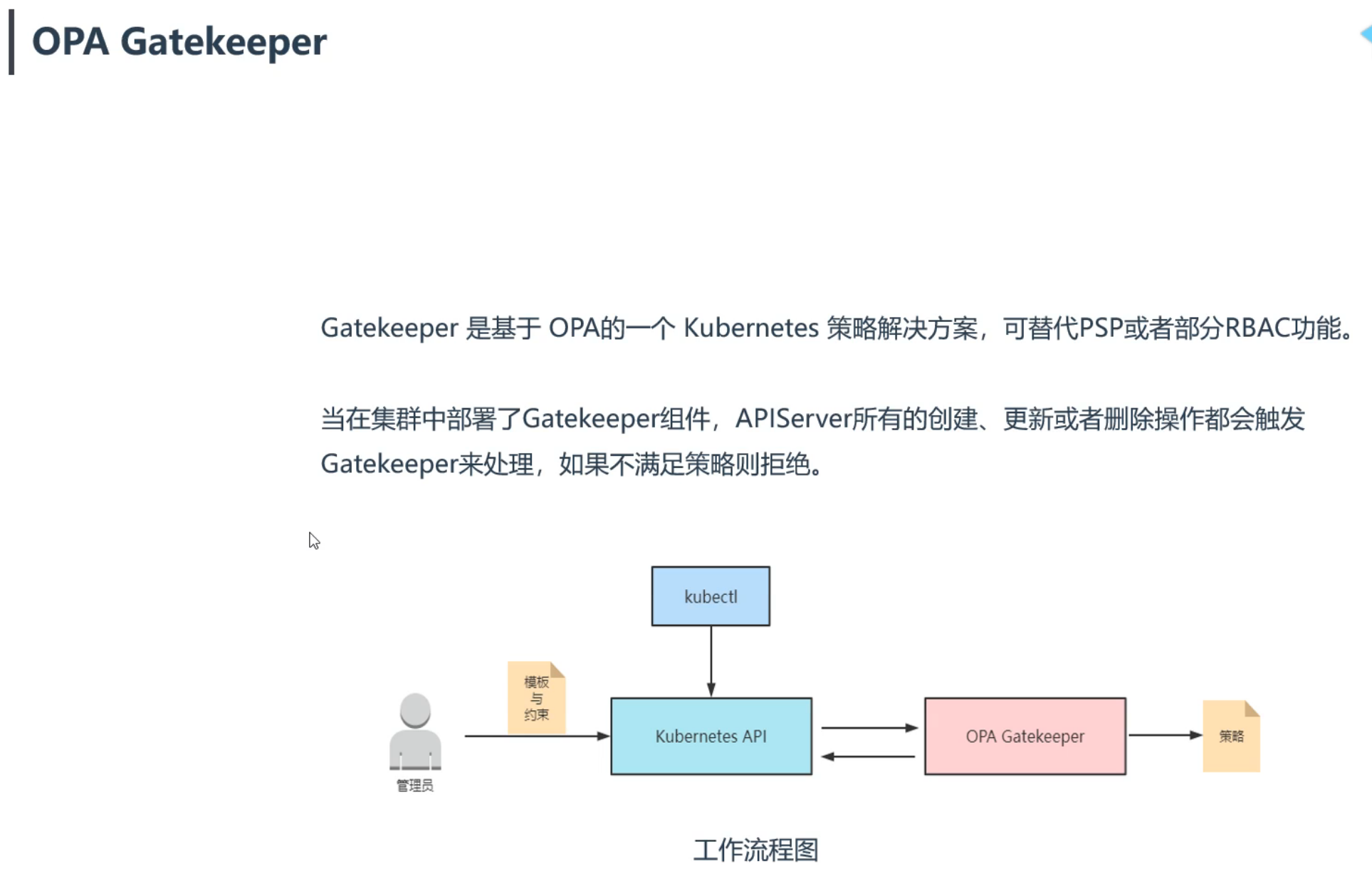

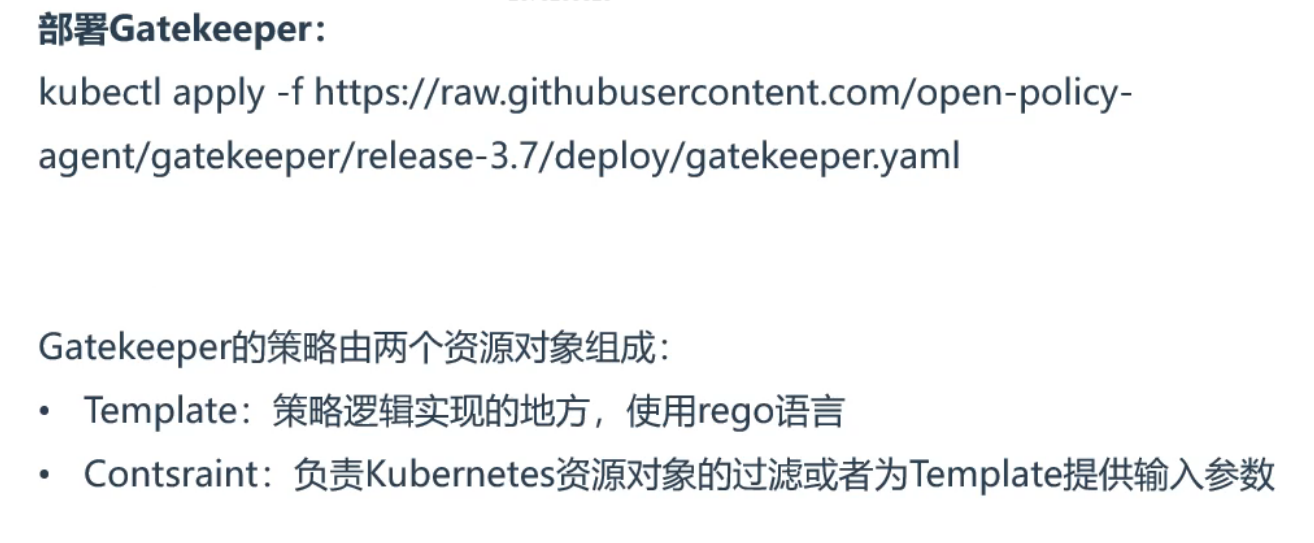

3. OPA Gatekeeper Policy Engine

- Introduction to OPA

PSP deprecation article: https://kubernetes.io/blog/2021/04/06/podsecuritypolicy-deprecation-past-present-and-future/

Replacement proposal: https://github.com/kubernetes/enhancements/issues/2579

- OPA Gatekeeper

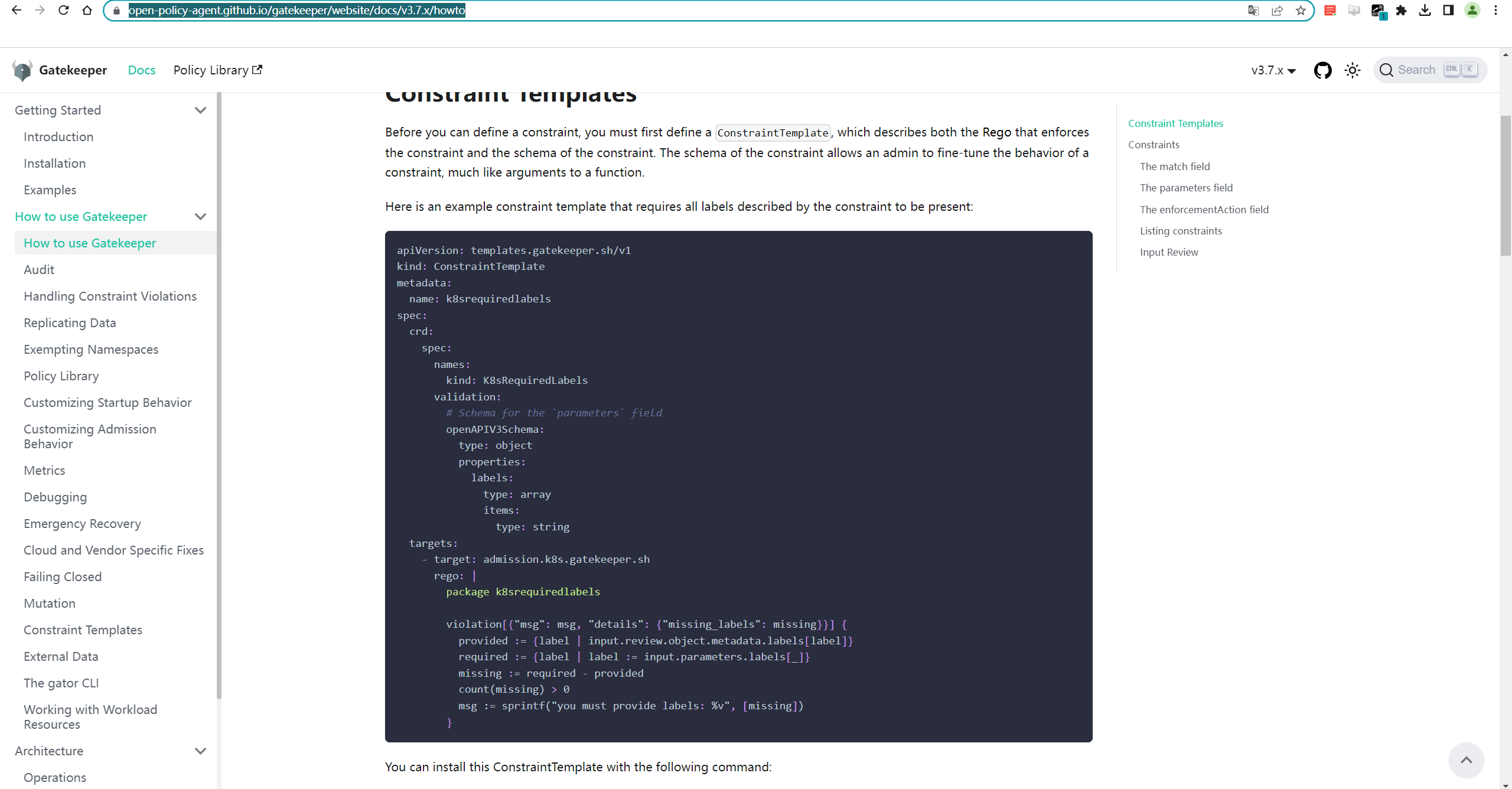

- OPA Gatekeeper official documentation

https://open-policy-agent.github.io/gatekeeper/website/docs/v3.7.x/howto

https://github.com/open-policy-agent/gatekeeper

https://open-policy-agent.github.io/gatekeeper/website/docs/install/

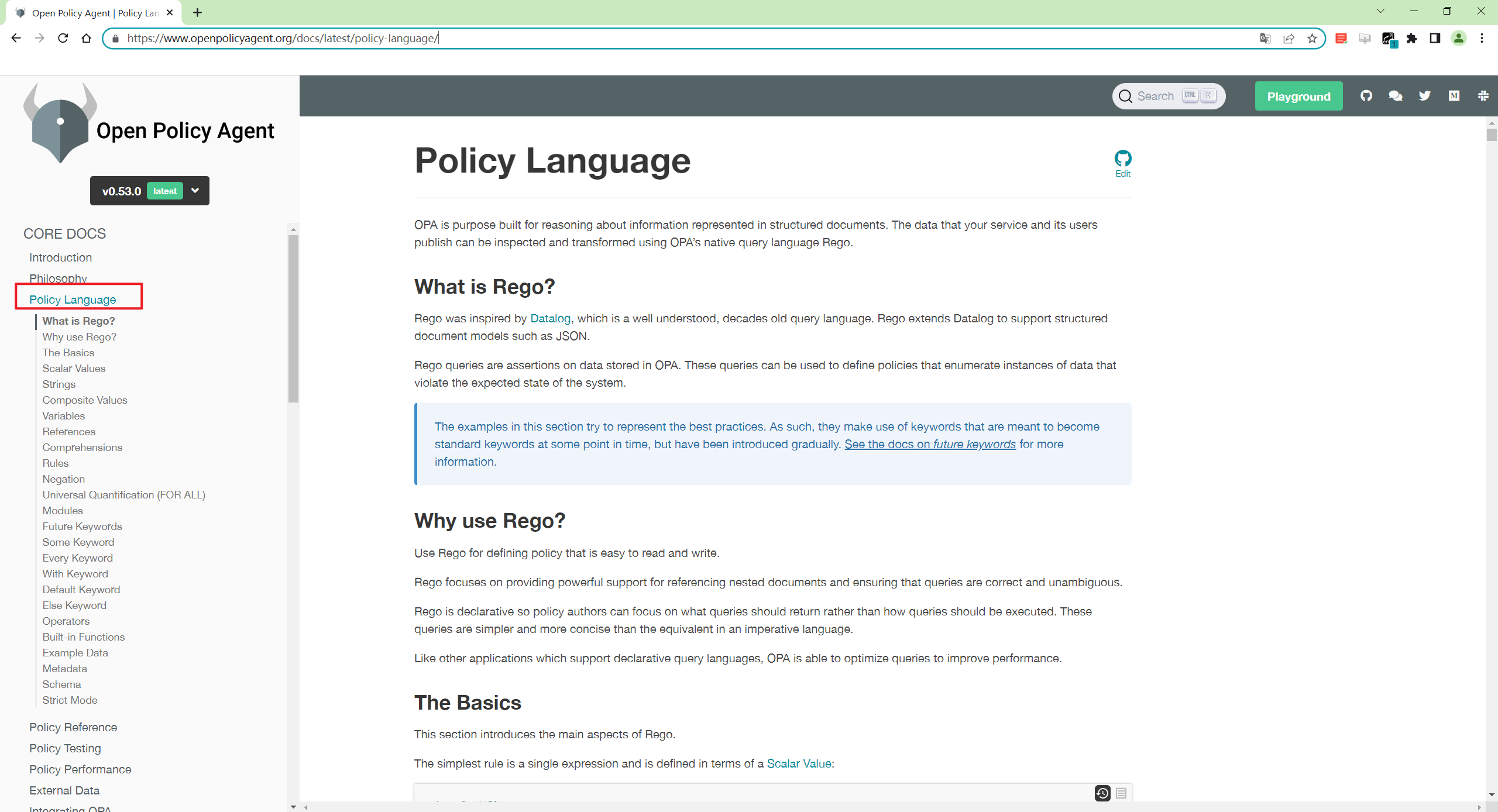

- Open Policy Agent (the Rego policy language)

https://www.openpolicyagent.org/docs/latest/policy-language/

Deploy Gatekeeper

==💘 Lab: Deploy Gatekeeper - 2023.6.1 (tested successfully)==

- Lab environment

```
Lab environment:
1. Win10, VMware Workstation VMs;
2. K8s cluster: 3 CentOS 7.6 (1810) VMs, 1 master node and 2 worker nodes
   k8s version: v1.20.0
   docker://20.10.7
   Gatekeeper manifest: https://raw.githubusercontent.com/open-policy-agent/gatekeeper/release-3.7/deploy/gatekeeper.yaml
```

- Lab software

```
Link: https://pan.baidu.com/s/1js_WMXJuiNvXxBtBP9PBcg?pwd=0820
Extraction code: 0820
2023.6.1-Lab: Deploy Gatekeeper (tested successfully)
```

1、关闭psp策略(如果存在的话)

1[root@k8s-master1 ~]#vim /etc/kubernetes/manifests/kube-apiserver.yaml

2将

3- --enable-admission-plugins=NodeRestriction,PodSecurityPolicy

4替换为

5- --enable-admission-plugins=NodeRestriction

2、安装opa gatekeeper

1[root@k8s-master1 ~]#mkdir opa

2[root@k8s-master1 ~]#cd opa/

3[root@k8s-master1 opa]#wget https://raw.githubusercontent.com/open-policy-agent/gatekeeper/release-3.7/deploy/gatekeeper.yaml

4[root@k8s-master1 opa]#kubectl apply -f gatekeeper.yaml

5namespace/gatekeeper-system created

6resourcequota/gatekeeper-critical-pods created

```bash
customresourcedefinition.apiextensions.k8s.io/assign.mutations.gatekeeper.sh created
customresourcedefinition.apiextensions.k8s.io/assignmetadata.mutations.gatekeeper.sh created
customresourcedefinition.apiextensions.k8s.io/configs.config.gatekeeper.sh created
customresourcedefinition.apiextensions.k8s.io/constraintpodstatuses.status.gatekeeper.sh created
customresourcedefinition.apiextensions.k8s.io/constrainttemplatepodstatuses.status.gatekeeper.sh created
customresourcedefinition.apiextensions.k8s.io/constrainttemplates.templates.gatekeeper.sh created
customresourcedefinition.apiextensions.k8s.io/modifyset.mutations.gatekeeper.sh created
customresourcedefinition.apiextensions.k8s.io/mutatorpodstatuses.status.gatekeeper.sh created
customresourcedefinition.apiextensions.k8s.io/providers.externaldata.gatekeeper.sh created
serviceaccount/gatekeeper-admin created
podsecuritypolicy.policy/gatekeeper-admin created
role.rbac.authorization.k8s.io/gatekeeper-manager-role created
clusterrole.rbac.authorization.k8s.io/gatekeeper-manager-role created
rolebinding.rbac.authorization.k8s.io/gatekeeper-manager-rolebinding created
clusterrolebinding.rbac.authorization.k8s.io/gatekeeper-manager-rolebinding created
secret/gatekeeper-webhook-server-cert created
service/gatekeeper-webhook-service created
deployment.apps/gatekeeper-audit created
deployment.apps/gatekeeper-controller-manager created
poddisruptionbudget.policy/gatekeeper-controller-manager created
mutatingwebhookconfiguration.admissionregistration.k8s.io/gatekeeper-mutating-webhook-configuration created
validatingwebhookconfiguration.admissionregistration.k8s.io/gatekeeper-validating-webhook-configuration created
[root@k8s-master1 opa]#

# Check
[root@k8s-master1 opa]#kubectl get po -ngatekeeper-system
NAME                                             READY   STATUS    RESTARTS   AGE
gatekeeper-audit-5b869c66f9-6qvs5                1/1     Running   0          76s
gatekeeper-controller-manager-85498f495c-46ghm   1/1     Running   0          76s
gatekeeper-controller-manager-85498f495c-8hwkz   1/1     Running   0          76s
gatekeeper-controller-manager-85498f495c-xc8db   1/1     Running   0          76s
```

Once the deployment is complete, you can test the effect with the two cases below. 😘

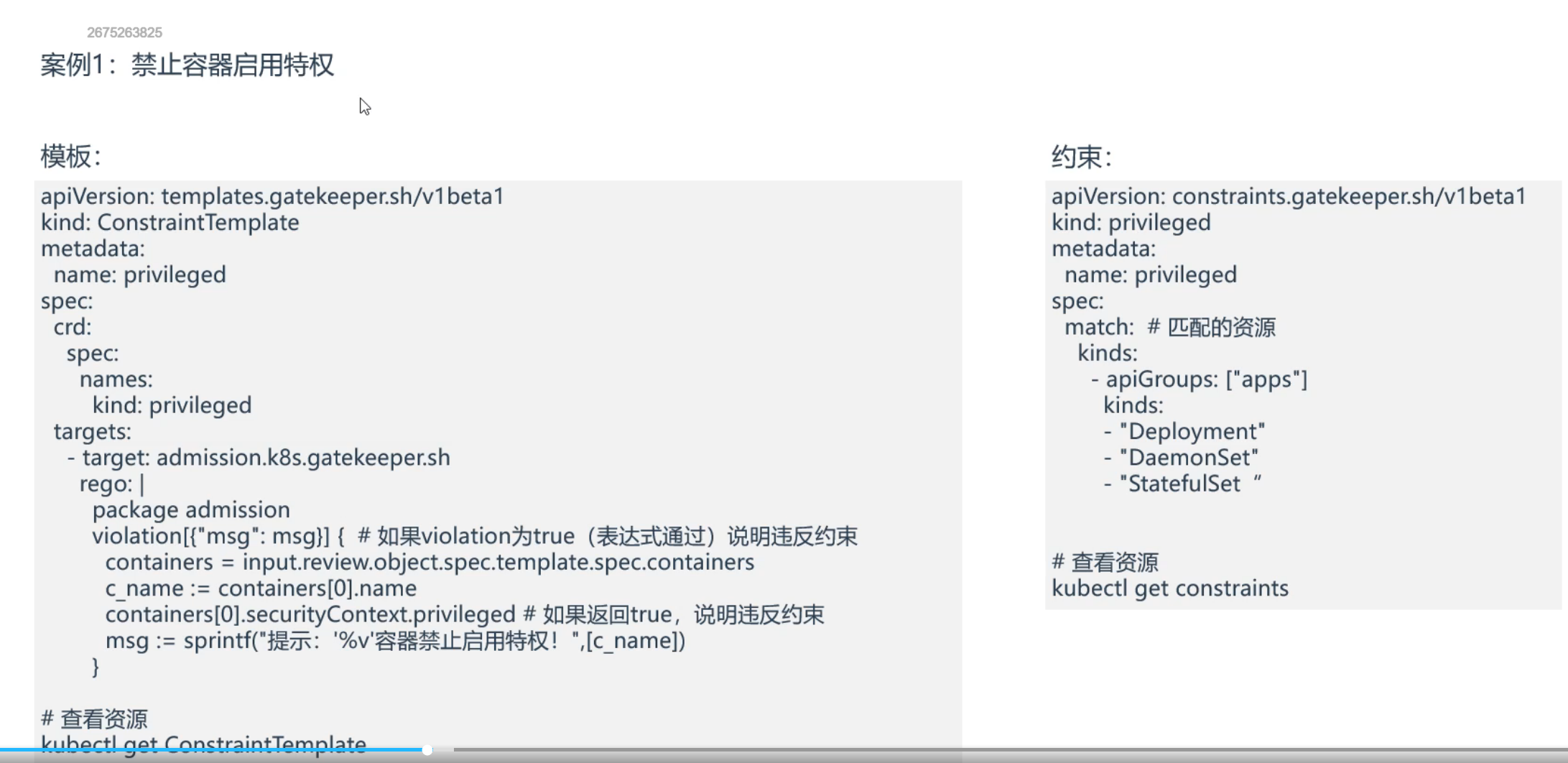

Case 1: Forbid privileged containers

==💘 Case 1: Forbid privileged containers-2023.6.1 (tested successfully)==

- Lab environment

```text
Lab environment:
1. Win10, VMware Workstation VMs;
2. K8s cluster: 3 CentOS 7.6 1810 VMs, 1 master node, 2 worker nodes
   k8s version: v1.20.0
   docker://20.10.7
   https://raw.githubusercontent.com/open-policy-agent/gatekeeper/release-3.7/deploy/gatekeeper.yaml
```

- Lab software

```text
Link: https://pan.baidu.com/s/1rlJnp1MScKcC1G8UVaIikA?pwd=0820
Extraction code: 0820
2023.6.1-opa-code
```

Gatekeeper is already installed.

Deploy privileged_tpl.yaml and privileged_constraints.yaml:

```yaml
[root@k8s-master1 opa]#vim privileged_tpl.yaml
apiVersion: templates.gatekeeper.sh/v1
kind: ConstraintTemplate
metadata:
  name: privileged
spec:
  crd:
    spec:
      names:
        kind: privileged
  targets:
    - target: admission.k8s.gatekeeper.sh
      rego: |
        package admission

        violation[{"msg": msg}] {  # if every expression in the body holds, violation is true: the constraint is violated
          containers = input.review.object.spec.template.spec.containers
          c_name := containers[0].name
          containers[0].securityContext.privileged  # true means a violation (note: only the first container is checked)
          msg := sprintf("提示: '%v'容器禁止启用特权!", [c_name])  # "Notice: container '%v' must not enable privileged mode!"
        }


[root@k8s-master1 opa]#vim privileged_constraints.yaml
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: privileged
metadata:
  name: privileged
spec:
  match:  # resources this constraint applies to
    kinds:
      - apiGroups: ["apps"]
        kinds:
          - "Deployment"
          - "DaemonSet"
          - "StatefulSet"


# Deploy
[root@k8s-master1 opa]#kubectl apply -f privileged_tpl.yaml
constrainttemplate.templates.gatekeeper.sh/privileged created
[root@k8s-master1 opa]#kubectl apply -f privileged_constraints.yaml
privileged.constraints.gatekeeper.sh/privileged created

# Check the resources
[root@k8s-master1 opa]#kubectl get ConstraintTemplate
NAME         AGE
privileged   71s
[root@k8s-master1 opa]#kubectl get constraints
NAME         AGE
privileged   2m9s
```

- Deploy a test Deployment

```yaml
[root@k8s-master1 opa]#kubectl create deployment web --image=nginx --dry-run=client -oyaml >deployment.yaml
[root@k8s-master1 opa]#vim deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: web
  name: web61
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - image: nginx
        name: nginx
        securityContext:
          privileged: true

# Deploy
[root@k8s-master1 opa]#kubectl apply -f deployment.yaml
Error from server ([privileged] 提示: 'nginx'容器禁止启用特权!): error when creating "deployment.yaml": admission webhook "validation.gatekeeper.sh" denied the request: [privileged] 提示: 'nginx'容器禁止启用特权!
# The request is rejected. Now disable privileged mode, deploy again and observe the result

[root@k8s-master1 opa]#vim deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: web
  name: web61
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - image: nginx
        name: nginx
        #securityContext:
        #  privileged: true
[root@k8s-master1 opa]#kubectl apply -f deployment.yaml
deployment.apps/web61 created
[root@k8s-master1 opa]#kubectl get deployment|grep web61
web61   1/1     1            1           18s
# With privileged mode disabled, the Deployment is created normally
```

Test complete. 😘
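To make the Rego rule's logic concrete, here is a minimal Python sketch of what the `violation` rule evaluates against the object Gatekeeper passes in (`input.review.object`). It is an illustration only, not Gatekeeper code, and the function names are hypothetical. It also shows a limitation of the template above: because it only reads `containers[0]`, a privileged second container slips through.

```python
from typing import Any, Dict, List


def _dig(obj: Any, *keys: str) -> Any:
    """Follow nested keys, returning None as soon as one is missing (like Rego's 'undefined')."""
    for key in keys:
        if not isinstance(obj, dict):
            return None
        obj = obj.get(key)
    return obj


def _containers(obj: Dict[str, Any]) -> List[Dict[str, Any]]:
    # Same path as the Rego: input.review.object.spec.template.spec.containers
    return _dig(obj, "spec", "template", "spec", "containers") or []


def _is_privileged(container: Dict[str, Any]) -> bool:
    # Like the Rego expression, only an explicit `privileged: true` counts.
    return _dig(container, "securityContext", "privileged") is True


def violations_first_only(obj: Dict[str, Any]) -> List[str]:
    """Mirror of the template above: only containers[0] is inspected."""
    containers = _containers(obj)
    if containers and _is_privileged(containers[0]):
        return [f"container '{containers[0]['name']}' must not run privileged"]
    return []


def violations_all(obj: Dict[str, Any]) -> List[str]:
    """Stricter variant (Rego: iterate with containers[_]): every container is inspected."""
    return [
        f"container '{c['name']}' must not run privileged"
        for c in _containers(obj)
        if _is_privileged(c)
    ]


# Hypothetical Deployment whose second container is privileged.
deployment = {
    "kind": "Deployment",
    "spec": {"template": {"spec": {"containers": [
        {"name": "nginx", "image": "nginx"},
        {"name": "sidecar", "image": "busybox", "securityContext": {"privileged": True}},
    ]}}},
}

print(violations_first_only(deployment))  # []
print(violations_all(deployment))         # ["container 'sidecar' must not run privileged"]
```

In Rego, the stricter behaviour corresponds to iterating with `c := containers[_]` instead of indexing `containers[0]`; `initContainers` are not covered by either version.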

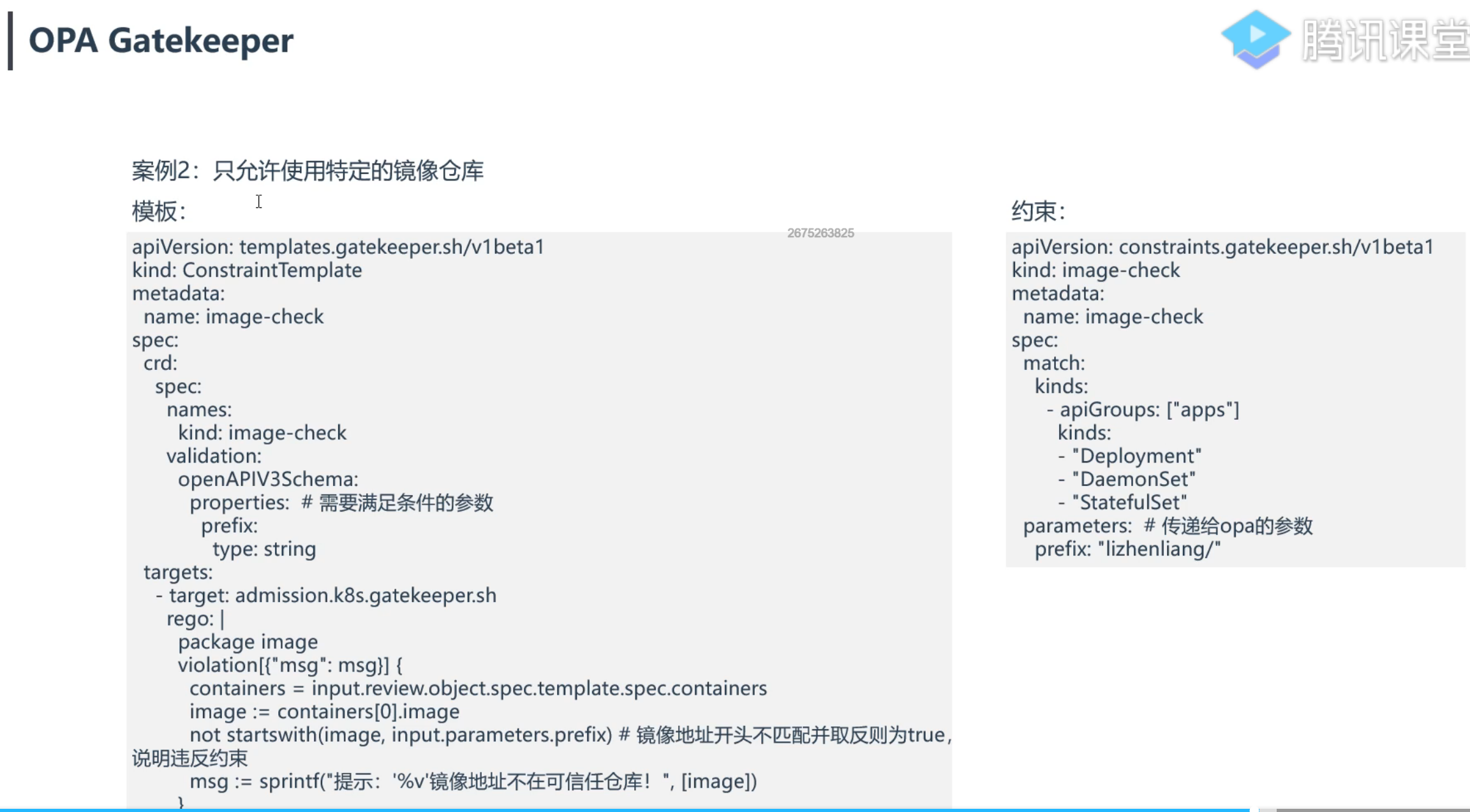

Case 2: Only allow images from a specific registry

==💘 Case 2: Only allow images from a specific registry-2023.6.1 (tested successfully)==

- Lab environment

```text
Lab environment:
1. Win10, VMware Workstation VMs;
2. K8s cluster: 3 CentOS 7.6 1810 VMs, 1 master node, 2 worker nodes
   k8s version: v1.20.0
   docker://20.10.7
   https://raw.githubusercontent.com/open-policy-agent/gatekeeper/release-3.7/deploy/gatekeeper.yaml
```

- Lab software

```text
Link: https://pan.baidu.com/s/1rlJnp1MScKcC1G8UVaIikA?pwd=0820
Extraction code: 0820
2023.6.1-opa-code
```

Gatekeeper is already installed.

Deploy the resources:

```yaml
[root@k8s-master1 opa]#cp privileged_tpl.yaml image-check_tpl.yaml
[root@k8s-master1 opa]#cp privileged_constraints.yaml image-check_constraints.yaml

[root@k8s-master1 opa]#vim image-check_tpl.yaml
apiVersion: templates.gatekeeper.sh/v1
kind: ConstraintTemplate
metadata:
  name: image-check
spec:
  crd:
    spec:
      names:
        kind: image-check
      validation:
        # Schema for the `parameters` field
        openAPIV3Schema:
          type: object
          properties:
            prefix:
              type: string
  targets:
    - target: admission.k8s.gatekeeper.sh
      rego: |
        package image

        violation[{"msg": msg}] {
          containers = input.review.object.spec.template.spec.containers
          image := containers[0].image
          not startswith(image, input.parameters.prefix)  # true when the image does not start with the prefix: the constraint is violated
          msg := sprintf("提示: '%v'镜像地址不再可信任仓库", [image])  # "Notice: image '%v' is not from a trusted registry"
        }


[root@k8s-master1 opa]#vim image-check_constraints.yaml
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: image-check
metadata:
  name: image-check
spec:
  match:
    kinds:
      - apiGroups: ["apps"]
        kinds:
          - "Deployment"
          - "DaemonSet"
          - "StatefulSet"
  parameters:  # parameters passed to OPA (input.parameters)
    prefix: "lizhenliang/"


# Deploy
[root@k8s-master1 opa]#kubectl apply -f image-check_tpl.yaml
constrainttemplate.templates.gatekeeper.sh/image-check created
[root@k8s-master1 opa]#kubectl apply -f image-check_constraints.yaml
image-check.constraints.gatekeeper.sh/image-check created

# Check
[root@k8s-master1 opa]#kubectl get constrainttemplate
NAME          AGE
image-check   71s
privileged    59m
[root@k8s-master1 opa]#kubectl get constraints
NAME                                                AGE
image-check.constraints.gatekeeper.sh/image-check   70s

NAME                                              AGE
privileged.constraints.gatekeeper.sh/privileged   59m
```

- Create a test Deployment

```bash
[root@k8s-master1 opa]#kubectl create deployment web666 --image=nginx
error: failed to create deployment: admission webhook "validation.gatekeeper.sh" denied the request: [image-check] 提示: 'nginx'镜像地址不再可信任仓库
[root@k8s-master1 opa]#kubectl create deployment web666 --image=lizhenliang/nginx
deployment.apps/web666 created
```

The results are as expected. 😘
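The `image-check` rule boils down to a string-prefix test on the image reference. Here is a minimal Python sketch of the same check. The function names are hypothetical, and unlike the template above it checks every container rather than only `containers[0]`. It also shows why a plain prefix is brittle: a fully qualified reference to the same repository does not match.

```python
from typing import Any, Dict, List


def images(obj: Dict[str, Any]) -> List[str]:
    """All container images in spec.template.spec.containers (empty list if absent)."""
    containers = obj.get("spec", {}).get("template", {}).get("spec", {}).get("containers", [])
    return [c.get("image", "") for c in containers]


def untrusted_images(obj: Dict[str, Any], prefix: str) -> List[str]:
    """Images for which `startswith(image, prefix)` is false, checked for every container."""
    return [img for img in images(obj) if not img.startswith(prefix)]


def deployment_with(*imgs: str) -> Dict[str, Any]:
    """Hypothetical helper: a minimal Deployment-shaped dict with one container per image."""
    containers = [{"name": f"c{i}", "image": img} for i, img in enumerate(imgs)]
    return {"spec": {"template": {"spec": {"containers": containers}}}}


prefix = "lizhenliang/"  # the value from image-check_constraints.yaml
print(untrusted_images(deployment_with("nginx"), prefix))              # ['nginx']
print(untrusted_images(deployment_with("lizhenliang/nginx"), prefix))  # []
# Same repository written with an explicit registry host: still rejected.
print(untrusted_images(deployment_with("docker.io/lizhenliang/nginx"), prefix))  # ['docker.io/lizhenliang/nginx']
```

The trailing `/` in the prefix matters: with `lizhenliang` alone, an image such as `lizhenliang-fake/nginx` would also pass.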

4. Storing sensitive data in Secrets

See the separate document.

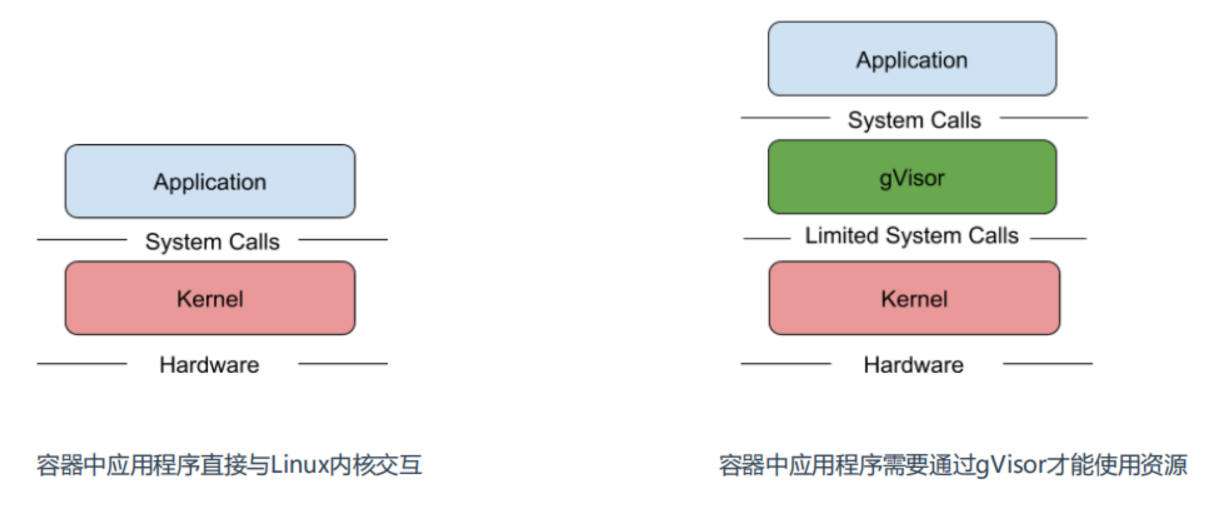

5. Running containers in a security sandbox: introduction to gVisor

gVisor overview

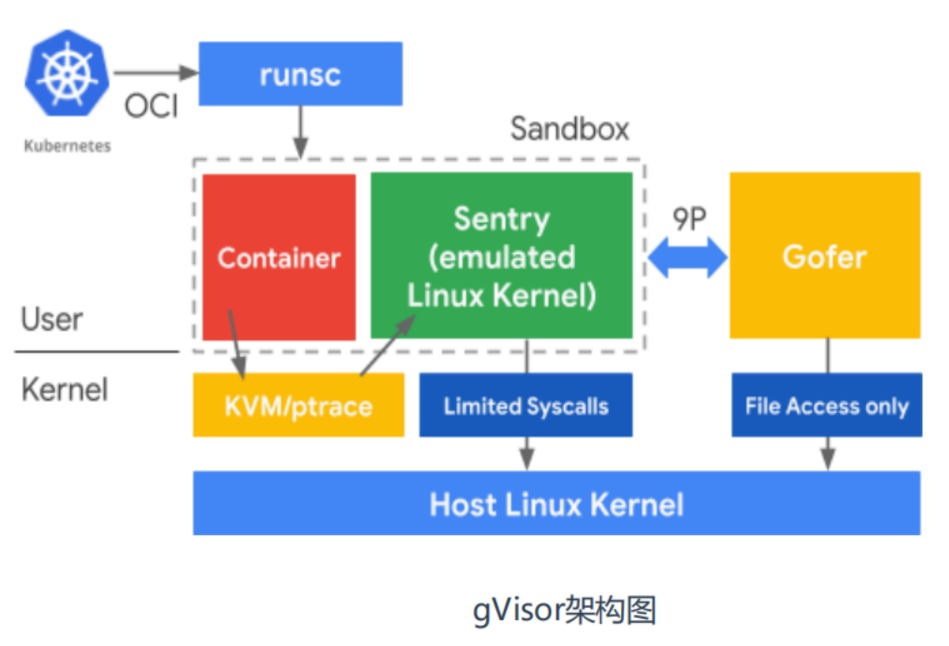

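As is well known, applications in a container make system calls directly to the host's Linux kernel, so the isolation between containers is relatively weak. The kernel keeps strengthening its security features, but its code base is extremely complex and new CVEs keep appearing. Reducing this risk therefore means strengthening isolation: cutting off the direct dependency of programs in the container on the host kernel.

gVisor, an open-source container sandbox from Google, follows exactly this approach. It sits between the containerized application and the host kernel, implements most Linux system calls itself, and redirects the system calls of processes in the container to gVisor instead of the host kernel.

gVisor is OCI-compatible and integrates seamlessly with Docker and K8s, so it is easy to use.

Project: https://github.com/google/gvisor

gVisor architecture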

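gVisor consists of 3 components:

• runsc: the runtime engine, responsible for creating and destroying containers.

• Sentry: handles the system calls made by programs inside the container.

• Gofer: proxies file system operations; IO requests are relayed through it to the host.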

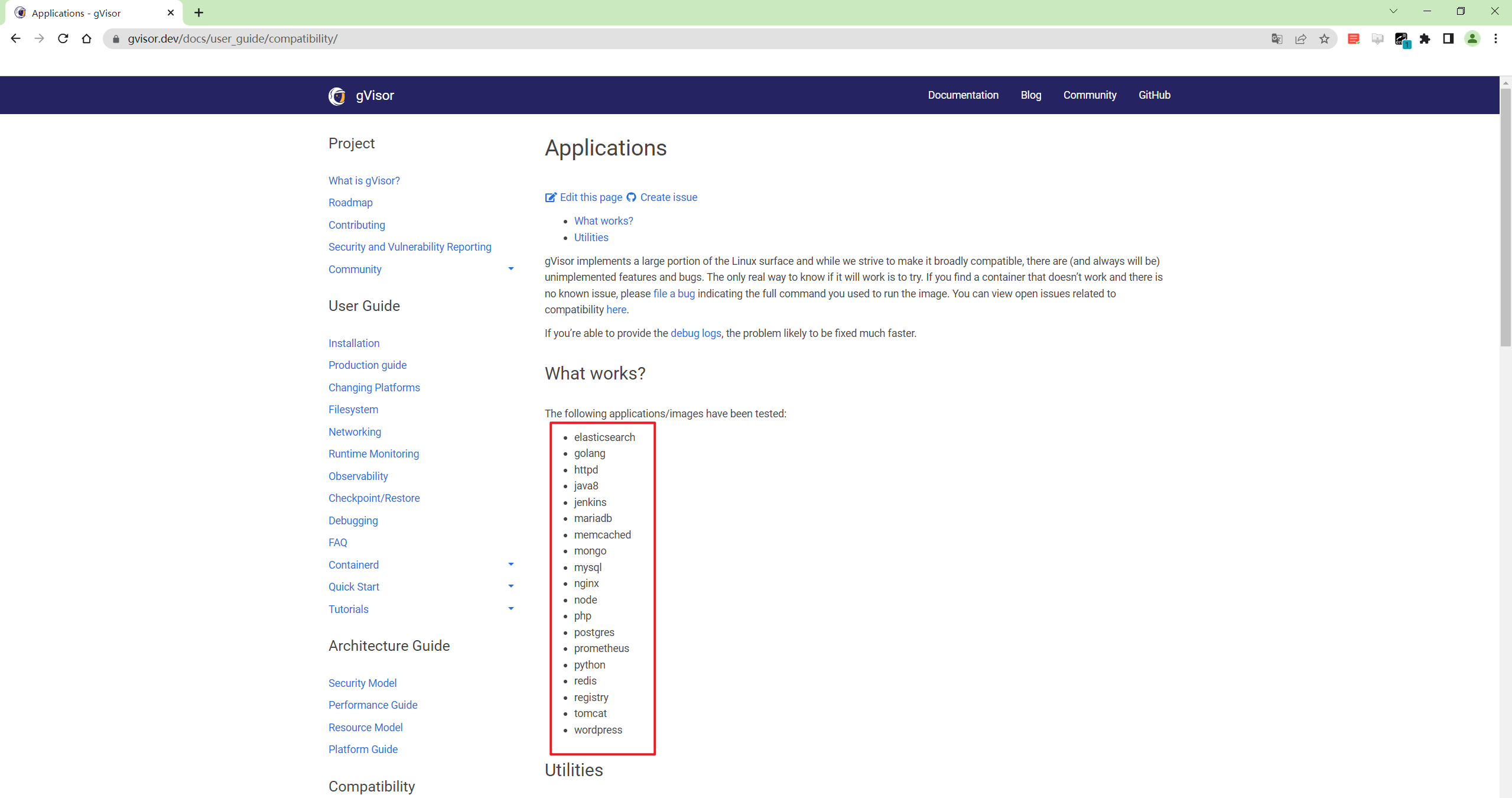

- Applications and tools already tested with gVisor

https://gvisor.dev/docs/user_guide/compatibility/

Case 1: Integrating gVisor with Docker

==💘 Hands-on: Integrating gVisor with Docker-2023.6.2 (tested successfully)==

- Lab environment

```text
Lab environment:
1. Win10, VMware Workstation VMs;
2. K8s cluster: 3 CentOS 7.6 1810 VMs, 1 master node, 2 worker nodes
   k8s version: v1.20.0
   docker://20.10.7
```

- Lab software

```text
Link: https://pan.baidu.com/s/1xsLYDDsdonQzFUwWCPCdhg?pwd=0820
Extraction code: 0820
2023.6.2-gvisor
```

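- Base environment

Upgrade the kernel

gVisor kernel requirement: Linux 3.17+

CentOS 7 ships kernel 3.10 and therefore needs a kernel upgrade; Ubuntu does not.

Kernel upgrade steps on CentOS 7: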

```bash
# Check the current kernel
[root@k8s-node1 ~]#uname -r
3.10.0-957.el7.x86_64

# Upgrade the kernel
rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm
yum --enablerepo=elrepo-kernel install kernel-ml-devel kernel-ml -y
grub2-set-default 0    # boot the first GRUB entry (normally the newly installed kernel) by default
reboot

# Confirm the upgrade succeeded
[root@k8s-node1 ~]#uname -r
6.3.5-1.el7.elrepo.x86_64
```
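The 3.17+ requirement can also be checked programmatically, for example on every node before rolling out gVisor. A minimal Python sketch; the function names are my own (not part of gVisor), and it only compares the major/minor numbers of a `uname -r` string:

```python
import platform
import re
from typing import Tuple

MIN_KERNEL: Tuple[int, int] = (3, 17)  # gVisor requirement: Linux 3.17+


def kernel_version(release: str) -> Tuple[int, int]:
    """Parse (major, minor) from a `uname -r` string such as '3.10.0-957.el7.x86_64'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def meets_gvisor_requirement(release: str) -> bool:
    # Compare as integer tuples: as plain strings, "3.9" would wrongly sort after "3.17".
    return kernel_version(release) >= MIN_KERNEL


print(meets_gvisor_requirement("3.10.0-957.el7.x86_64"))      # False (stock CentOS 7)
print(meets_gvisor_requirement("6.3.5-1.el7.elrepo.x86_64"))  # True (after the upgrade)

if platform.system() == "Linux":
    # On the node itself: check the kernel that is actually running.
    print(platform.release(), meets_gvisor_requirement(platform.release()))
```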

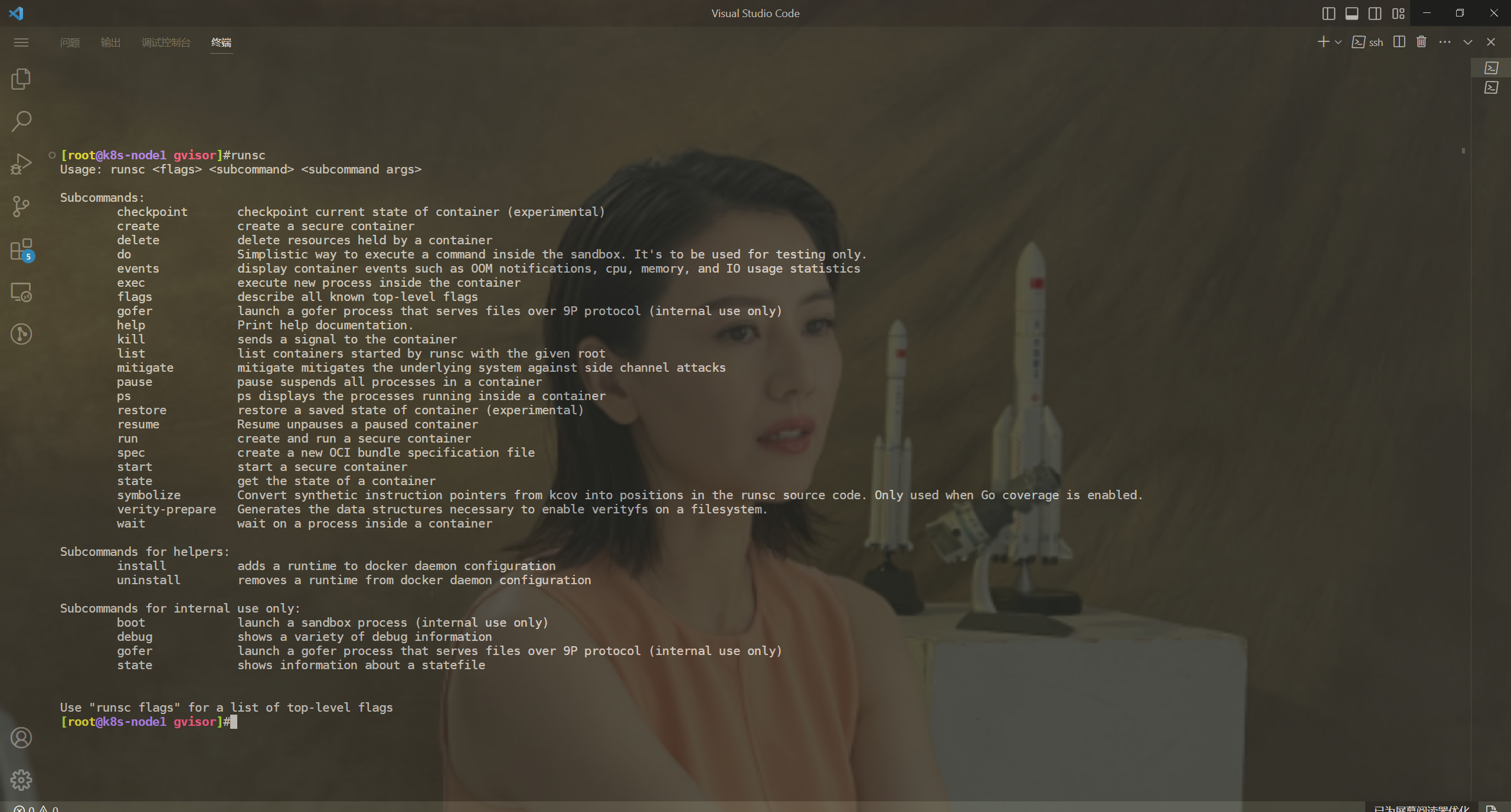

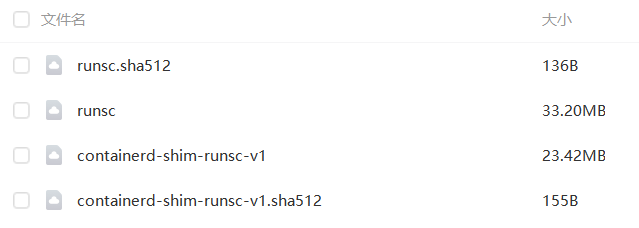

1. Prepare the gVisor binaries

```bash
# Just use the package provided in the lab software
[root@k8s-node1 ~]#mkdir gvisor
[root@k8s-node1 ~]#cd gvisor/
[root@k8s-node1 gvisor]#unzip gvisor.zip
Archive:  gvisor.zip
  inflating: runsc.sha512
  inflating: runsc
  inflating: containerd-shim-runsc-v1
  inflating: containerd-shim-runsc-v1.sha512

[root@k8s-node1 gvisor]#chmod +x containerd-shim-runsc-v1 runsc
[root@k8s-node1 gvisor]#mv containerd-shim-runsc-v1 runsc /usr/local/bin/

# Verify
[root@k8s-node1 gvisor]#runsc
Usage: runsc <flags> <subcommand> <subcommand args>

Subcommands:
        checkpoint       checkpoint current state of container (experimental)
        create           create a secure container
        delete           delete resources held by a container
        do               Simplistic way to execute a command inside the sandbox. It's to be used for testing only.
        events           display container events such as OOM notifications, cpu, memory, and IO usage statistics
        exec             execute new process inside the container
...
```
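The archive also ships `runsc.sha512` and `containerd-shim-runsc-v1.sha512`, and it is worth checking them before the `chmod`/`mv` step; the gVisor install guide does this with `sha512sum -c runsc.sha512 -c containerd-shim-runsc-v1.sha512`. Below is a minimal Python equivalent, assuming the files use the usual `sha512sum` line format `<hex digest>  <file name>`. The function names are hypothetical.

```python
import hashlib
from pathlib import Path


def sha512_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hex SHA-512 of a file, read in 1 MiB chunks so large binaries are not loaded at once."""
    digest = hashlib.sha512()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_checksum_file(checksum_file: Path) -> bool:
    """Verify every '<hex>  <name>' line; names are resolved next to the checksum file."""
    results = []
    for line in checksum_file.read_text().splitlines():
        if not line.strip():
            continue
        expected, name = line.split(maxsplit=1)
        name = name.lstrip("*")  # sha512sum prefixes binary-mode entries with '*'
        results.append(sha512_of(checksum_file.parent / name) == expected.lower())
    return bool(results) and all(results)


if __name__ == "__main__":
    # Run from the directory where gvisor.zip was unpacked.
    for name in ("runsc.sha512", "containerd-shim-runsc-v1.sha512"):
        path = Path(name)
        if path.exists():
            print(name, "OK" if verify_checksum_file(path) else "MISMATCH")
```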

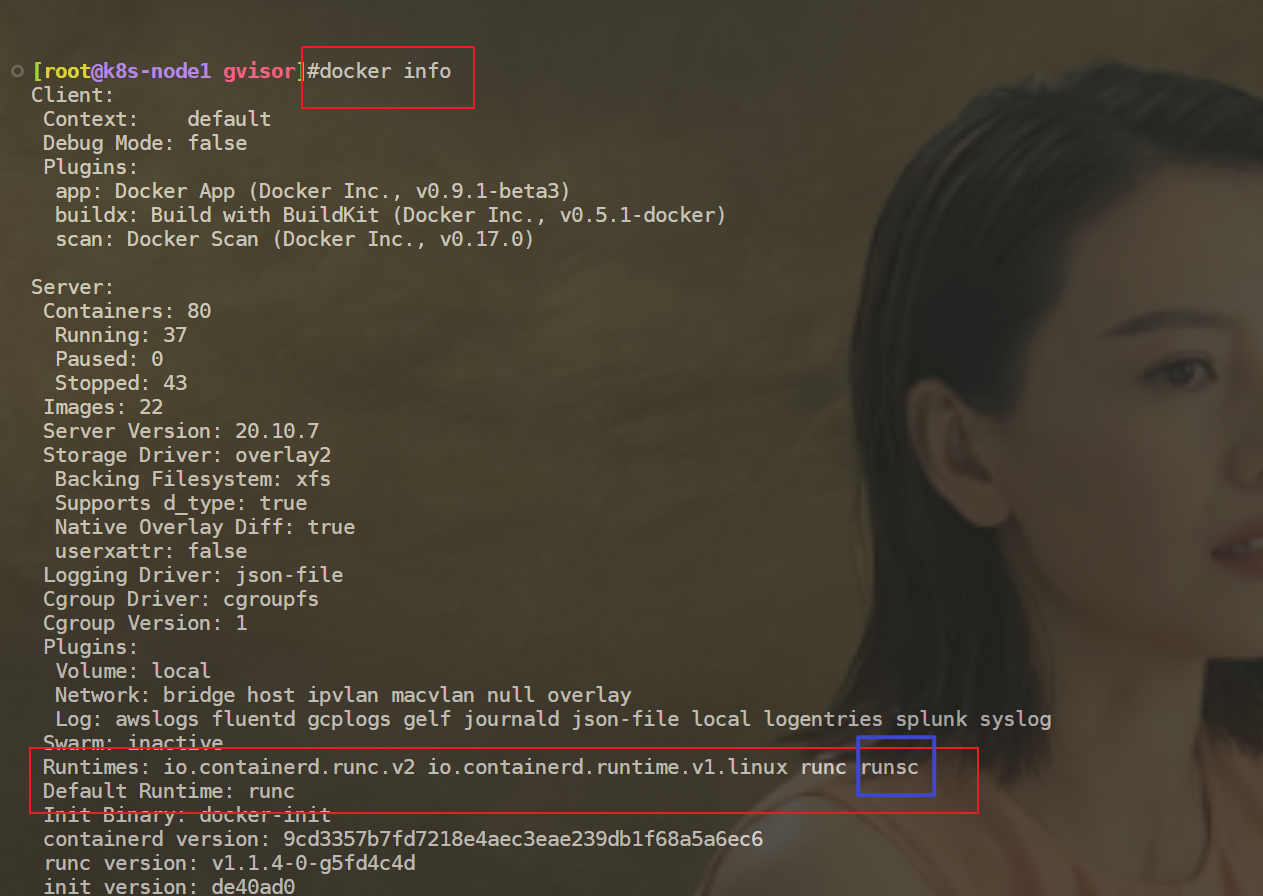

2. Configure Docker to use gVisor

```bash
[root@k8s-node1 gvisor]#cat /etc/docker/daemon.json
{
  "registry-mirrors":["https://dockerhub.azk8s.cn","http://hub-mirror.c.163.com","http://qtid6917.mirror.aliyuncs.com"]
}
[root@k8s-node1 gvisor]#runsc install
2023/06/02 10:21:25 Added runtime "runsc" with arguments [] to "/etc/docker/daemon.json".
[root@k8s-node1 gvisor]#cat /etc/docker/daemon.json
{
  "registry-mirrors": [
    "https://dockerhub.azk8s.cn",
    "http://hub-mirror.c.163.com",
    "http://qtid6917.mirror.aliyuncs.com"
  ],
  "runtimes": {
    "runsc": {
      "path": "/usr/local/bin/runsc"
    }
  }
}
[root@k8s-node1 gvisor]#systemctl restart docker
```
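Comparing the two `cat` outputs, `runsc install` only added a `runtimes.runsc` entry and left the existing keys alone. If you manage daemon.json with your own tooling, the same change can be made as an idempotent edit. A minimal Python sketch; the function name is hypothetical, and this is not how runsc implements it:

```python
import copy
import json
from typing import Any, Dict


def add_runtime(config: Dict[str, Any], name: str, path: str) -> Dict[str, Any]:
    """Return a copy of a daemon.json dict with runtimes[name] = {"path": path}.

    Other keys (e.g. registry-mirrors) and other runtimes are kept, and applying
    it twice gives the same result.
    """
    updated = copy.deepcopy(config)
    updated.setdefault("runtimes", {})[name] = {"path": path}
    return updated


before = {
    "registry-mirrors": [
        "https://dockerhub.azk8s.cn",
        "http://hub-mirror.c.163.com",
        "http://qtid6917.mirror.aliyuncs.com",
    ]
}
after = add_runtime(before, "runsc", "/usr/local/bin/runsc")
print(json.dumps(after, indent=2))
# To apply it, write the JSON back to /etc/docker/daemon.json (as root),
# then run `systemctl restart docker` as shown above.
```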

3. Verify

```bash
docker info    # the list of container runtimes now also includes runsc
```

Run containers with gVisor:

```bash
[root@k8s-node1 gvisor]#docker run -d --name=web-normal nginx
f826403374fa703f9715bfa218ea405bbbbf0cf765ac72defc7f94934cb7e0cf
[root@k8s-node1 gvisor]#docker run -d --name=web-gvisor --runtime=runsc nginx
c1eb1405fd9a09db41525ac68061f8c324dd6a3e5ae9d90828c7d257d3ab3294

[root@k8s-node1 gvisor]#docker exec web-normal uname -r
6.3.5-1.el7.elrepo.x86_64
[root@k8s-node1 gvisor]#docker exec web-gvisor uname -r
4.4.0

[root@k8s-node1 gvisor]#uname -r
6.3.5-1.el7.elrepo.x86_64
# The container created with runsc reports kernel 4.4.0, while the container created with the default runc uses the host kernel 6.3.5-1.

# You can also check the gVisor kernel log with the following command
[root@k8s-node1 gvisor]#docker run --runtime=runsc nginx dmesg
[    0.000000] Starting gVisor...
[    0.130598] Searching for socket adapter...
[    0.134223] Granting licence to kill(2)...
[    0.500262] Digging up root...
[    0.952825] Reticulating splines...
[    1.112800] Waiting for children...
[    1.355414] Committing treasure map to memory...
[    1.740439] Conjuring /dev/null black hole...
[    2.014772] Gathering forks...
[    2.079167] Feeding the init monster...
[    2.452438] Generating random numbers by fair dice roll...
[    2.566791] Ready!
[root@k8s-node1 gvisor]
```
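Both checks above can be scripted. A minimal Python sketch, assuming you have already captured the output of `docker exec <name> uname -r` and of `dmesg` inside the container as strings (the function names are hypothetical). It treats a container as sandboxed when its dmesg starts with the `Starting gVisor...` banner, or when its reported kernel differs from the host's. This is a heuristic based on the output shown above, not an official detection method.

```python
def looks_like_gvisor(dmesg_output: str) -> bool:
    """Heuristic: under gVisor the first non-empty dmesg line is the 'Starting gVisor...' banner."""
    for line in dmesg_output.splitlines():
        if line.strip():
            return "Starting gVisor" in line
    return False


def kernel_differs(host_release: str, container_release: str) -> bool:
    """runc containers share the host kernel, so a different `uname -r` hints at a sandbox."""
    return host_release.strip() != container_release.strip()


host = "6.3.5-1.el7.elrepo.x86_64"                         # uname -r on k8s-node1
print(kernel_differs(host, "6.3.5-1.el7.elrepo.x86_64"))  # False: web-normal (runc)
print(kernel_differs(host, "4.4.0"))                       # True: web-gvisor (runsc)
print(looks_like_gvisor("[    0.000000] Starting gVisor...\n[    2.566791] Ready!"))  # True
```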

Test complete. 😘

- Reference

Reference: https://gvisor.dev/docs/user_guide/install/

Case 2: Integrating gVisor with containerd

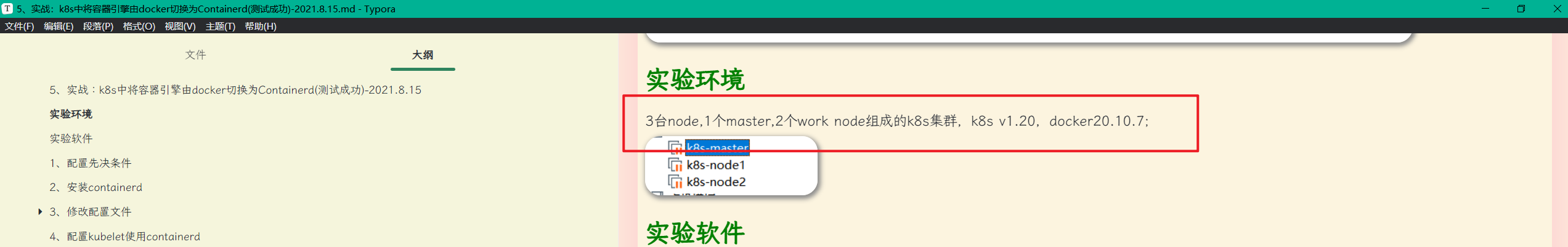

==💘 Hands-on: Integrating gVisor with containerd-2023.6.2 (tested successfully)==

- Lab environment

```text
Lab environment:
1. Win10, VMware Workstation VMs;
2. K8s cluster: 3 CentOS 7.6 1810 VMs, 1 master node, 2 worker nodes
   k8s version: v1.20.0
   docker://20.10.7
   containerd://1.4.11
```

- Lab software

```text
Link: https://pan.baidu.com/s/1ekAmnKS_iLjaGgNFxjZoXg?pwd=0820
Extraction code: 0820
2023.6.3-gVisor与Containerd集成-code
```

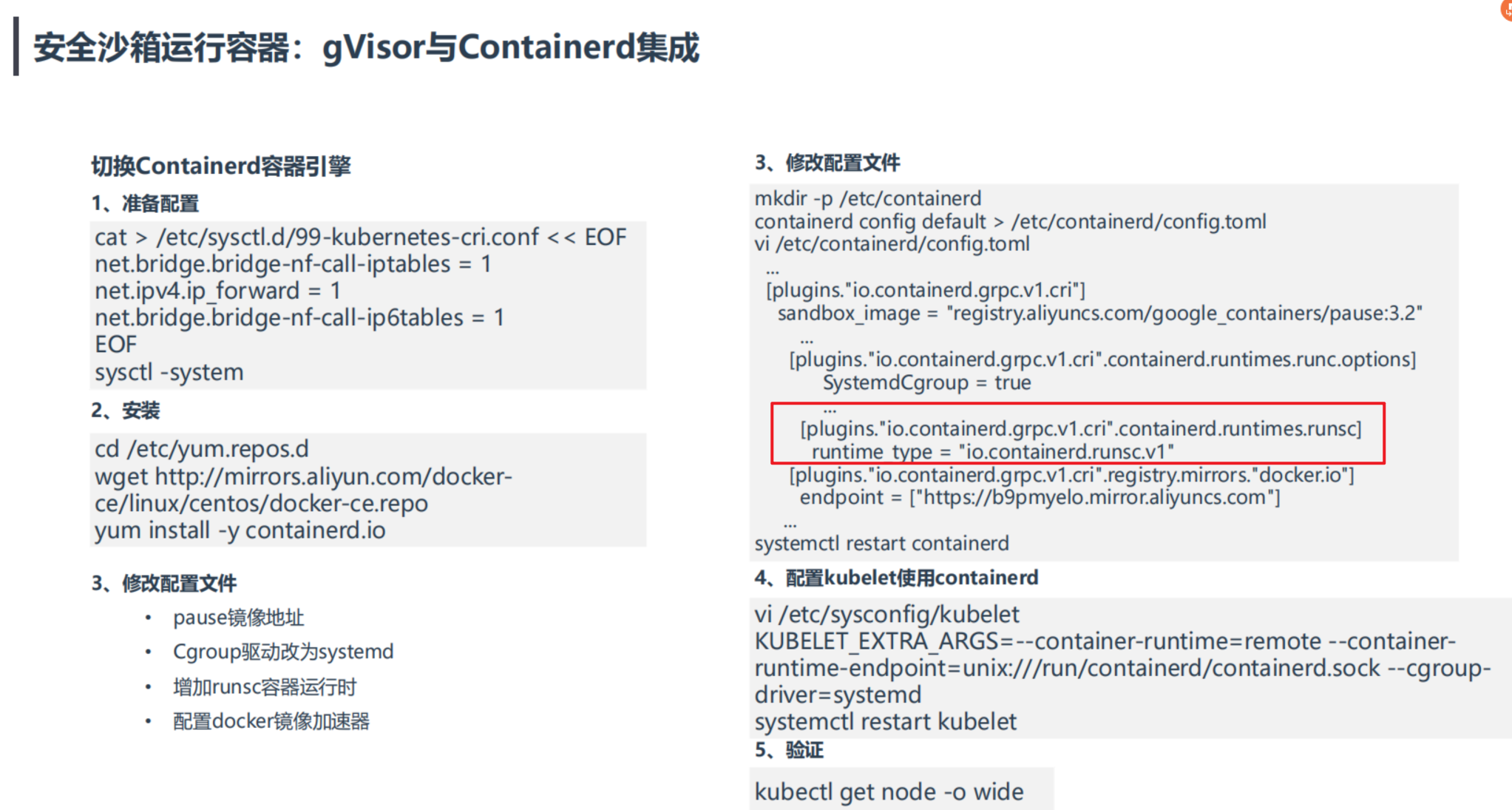

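This section shows how to switch one host of a Docker-based K8s cluster to the containerd container engine.

The steps are the same as in the earlier document; the only difference is an extra runsc entry in /etc/containerd/config.toml.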

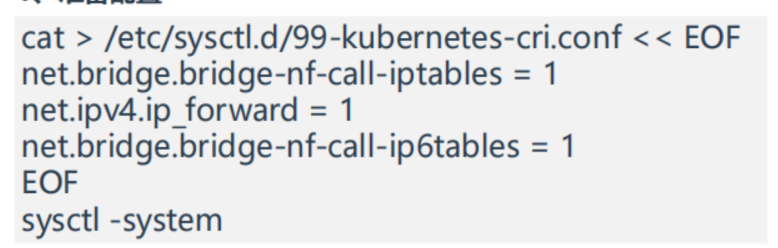

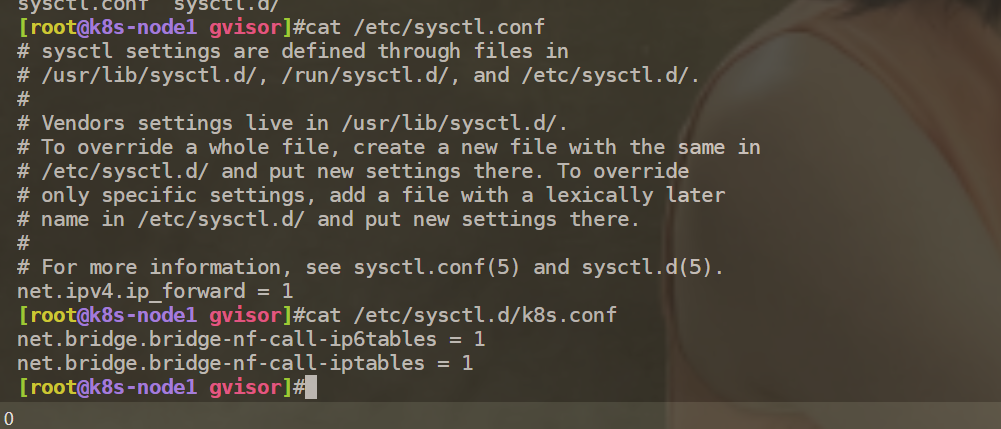

1. Prepare the configuration

This part was already configured when K8s was installed (omitted here):

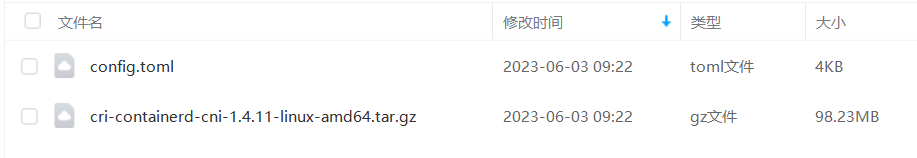

2. Install

This installation uses cri-containerd-cni-1.4.11-linux-amd64.tar.gz from https://github.com/containerd/containerd/releases/tag/v1.4.11

```bash
tar -C / -xzf cri-containerd-cni-1.4.11-linux-amd64.tar.gz
```

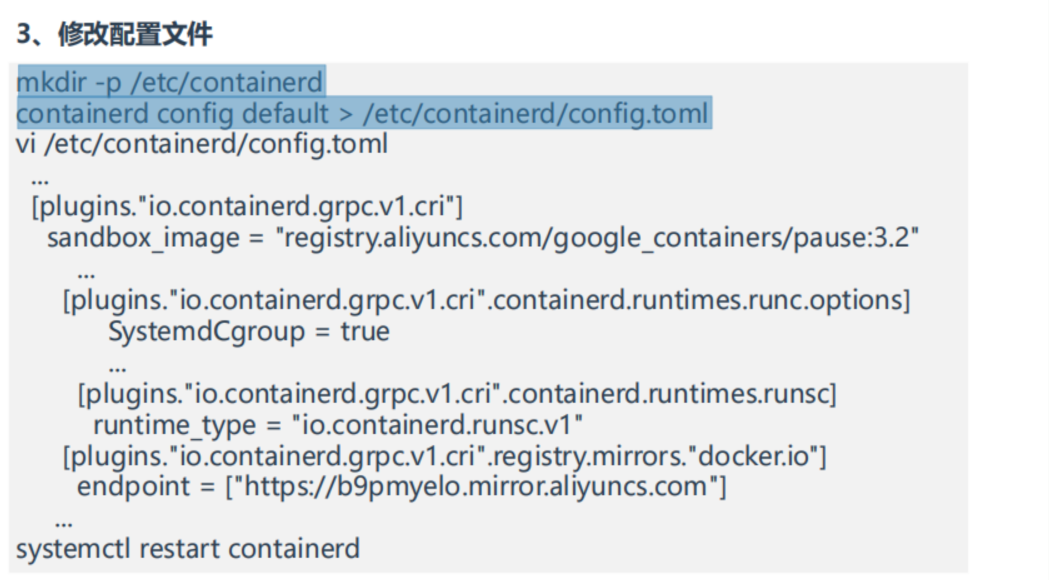

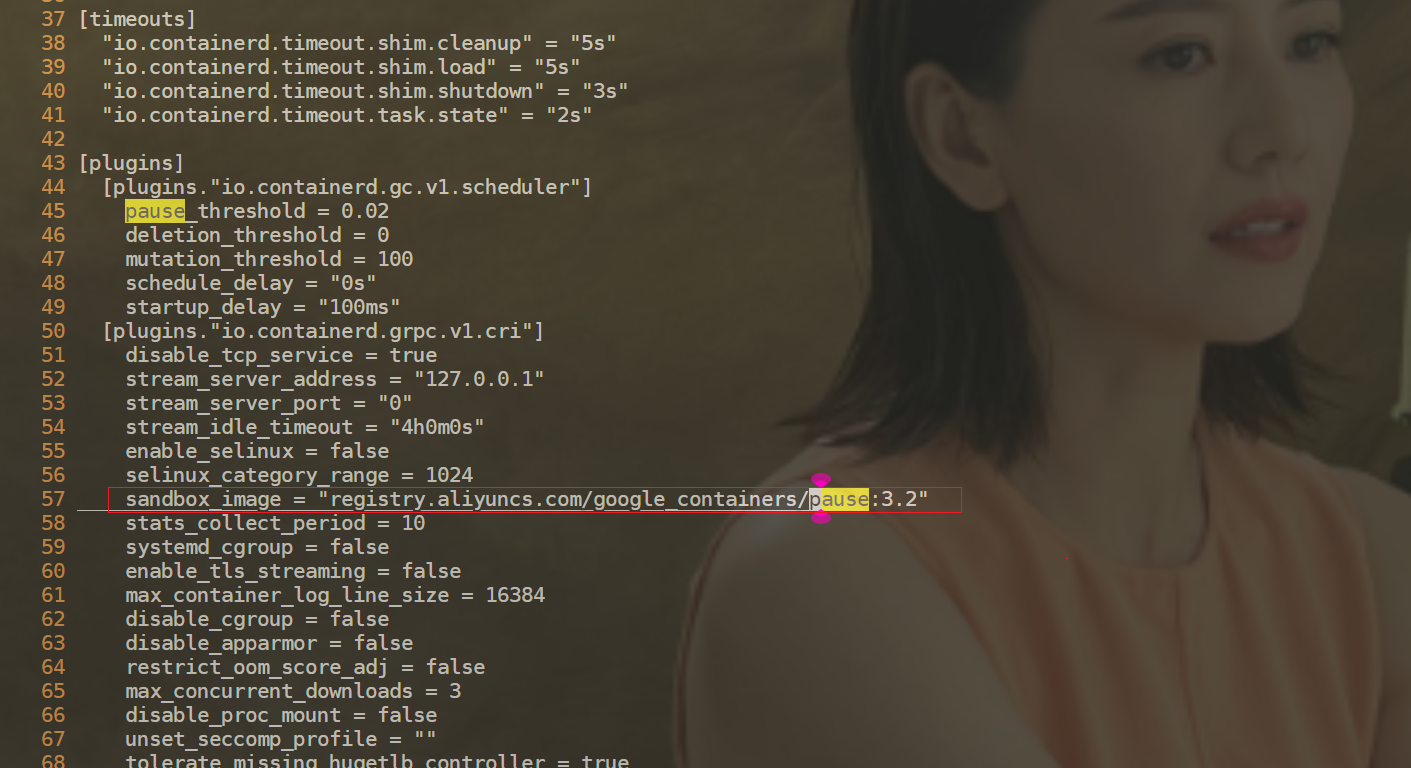

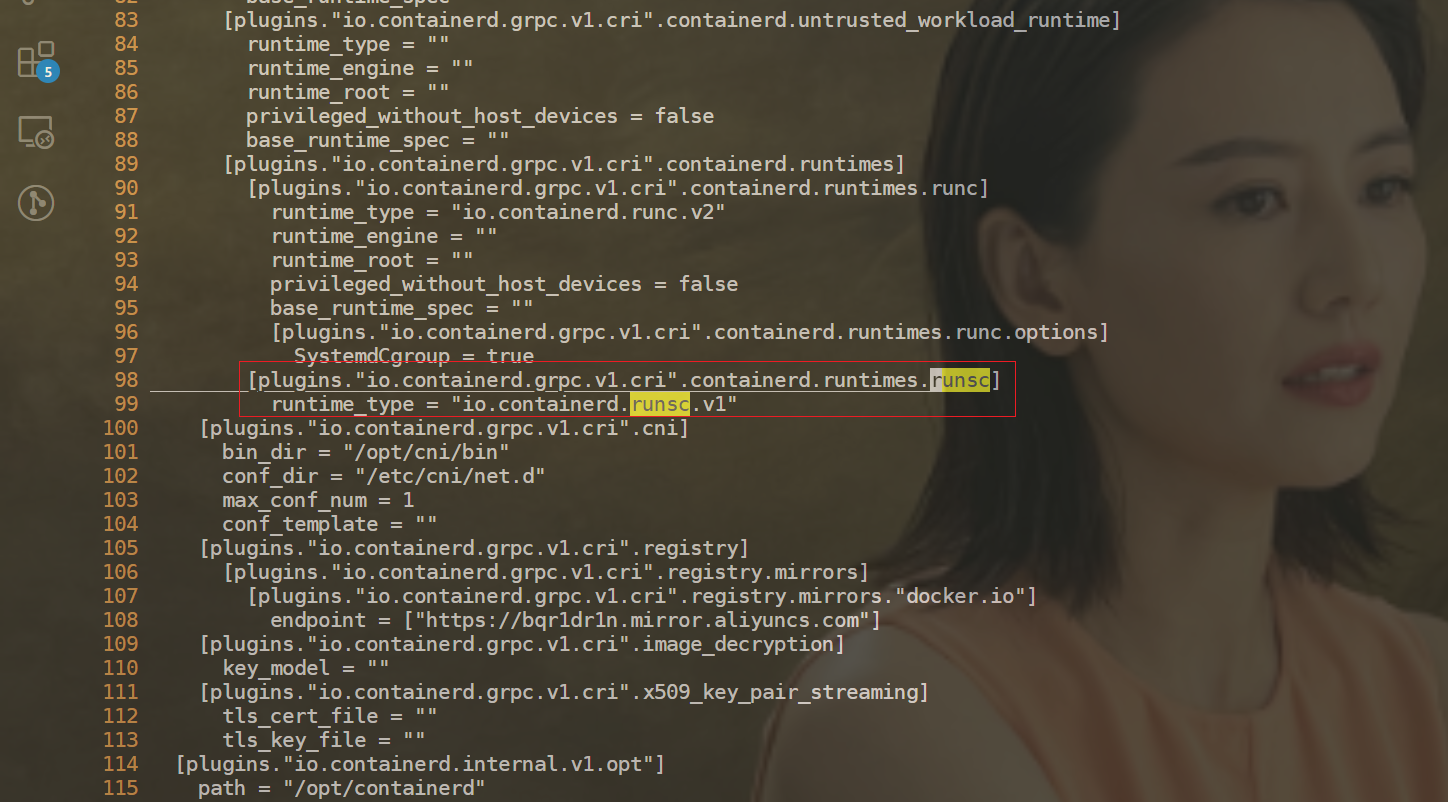

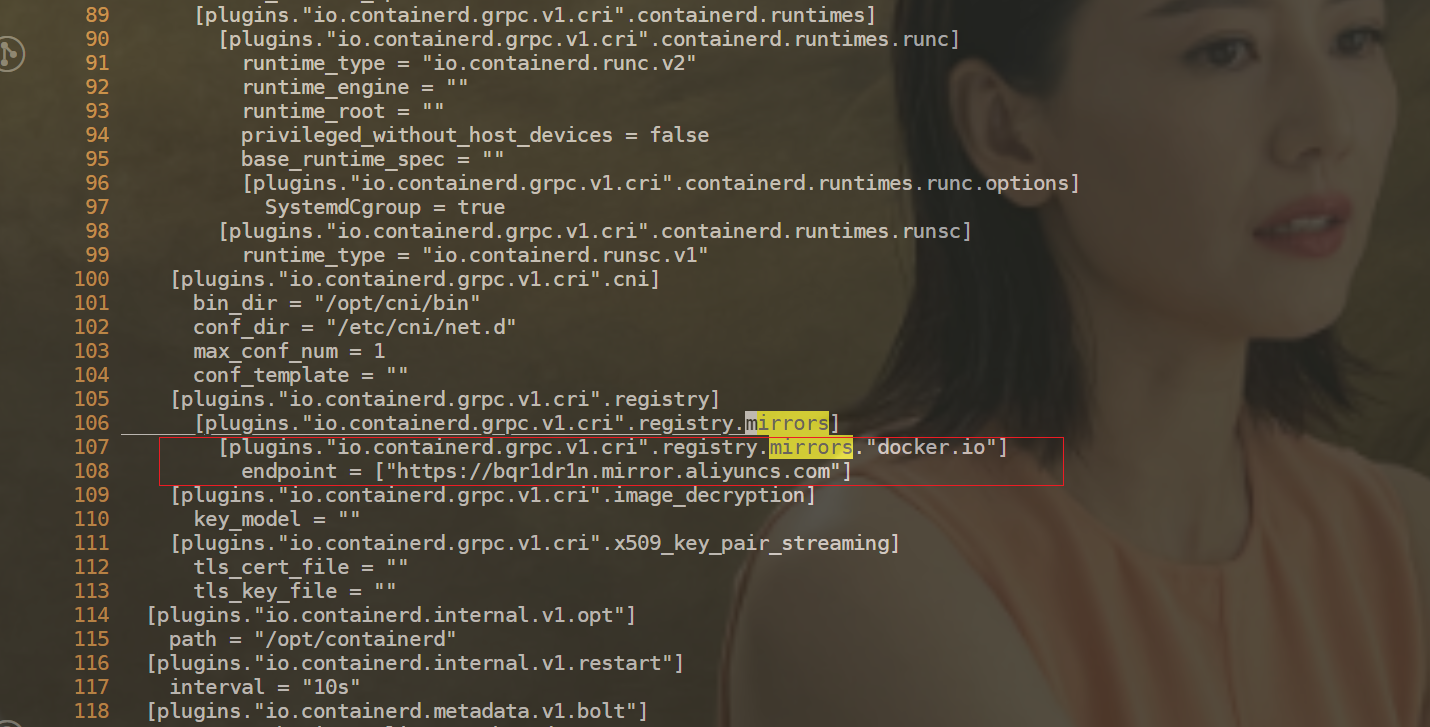

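3. Modify the configuration file:

• pause image address

• change the cgroup driver to systemd

• add the runsc container runtime

• configure a Docker Hub registry mirror

```bash
mkdir -p /etc/containerd
containerd config default > /etc/containerd/config.toml
vim /etc/containerd/config.toml
```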

```toml
vi /etc/containerd/config.toml
...
[plugins."io.containerd.grpc.v1.cri"]
  sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.2"
...
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
  SystemdCgroup = true
...
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runsc]
  runtime_type = "io.containerd.runsc.v1"
[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
  endpoint = ["https://b9pmyelo.mirror.aliyuncs.com"]
...
```

```bash
# Stop Docker
[root@k8s-node1 gvisor]#systemctl stop docker
Warning: Stopping docker.service, but it can still be activated by:
  docker.socket
[root@k8s-node1 gvisor]#systemctl stop docker.socket
[root@k8s-node1 gvisor]#docker info
Client:
 Context:    default
 Debug Mode: false
 Plugins:
  app: Docker App (Docker Inc., v0.9.1-beta3)
  buildx: Build with BuildKit (Docker Inc., v0.5.1-docker)
  scan: Docker Scan (Docker Inc., v0.17.0)

Server:
ERROR: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?
errors pretty printing info
[root@k8s-node1 gvisor]#ps -ef|grep docker
root      35418  26329  0 11:19 pts/0    00:00:00 grep --color=auto docker

[root@k8s-node1 ~]#containerd -v
containerd github.com/containerd/containerd v1.6.10 770bd0108c32f3fb5c73ae1264f7e503fe7b2661
[root@k8s-node1 ~]#runc -v
runc version 1.1.4
commit: v1.1.4-0-g5fd4c4d1
spec: 1.0.2-dev
go: go1.18.8
libseccomp: 2.5.1


# Restart containerd
[root@k8s-node1 gvisor]#systemctl restart containerd
```
4. Configure kubelet to use containerd

```bash
[root@k8s-node1 gvisor]#vi /etc/sysconfig/kubelet
# Replace
KUBELET_EXTRA_ARGS=
# with
KUBELET_EXTRA_ARGS=--container-runtime=remote --container-runtime-endpoint=unix:///run/containerd/containerd.sock --cgroup-driver=systemd
[root@k8s-node1 gvisor]#systemctl restart kubelet
```

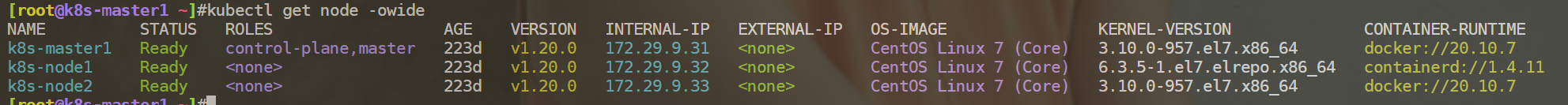

5. Verify

Note that this switch is not seamless: the Pods running on this node will be recreated.

Configuration complete. 😘

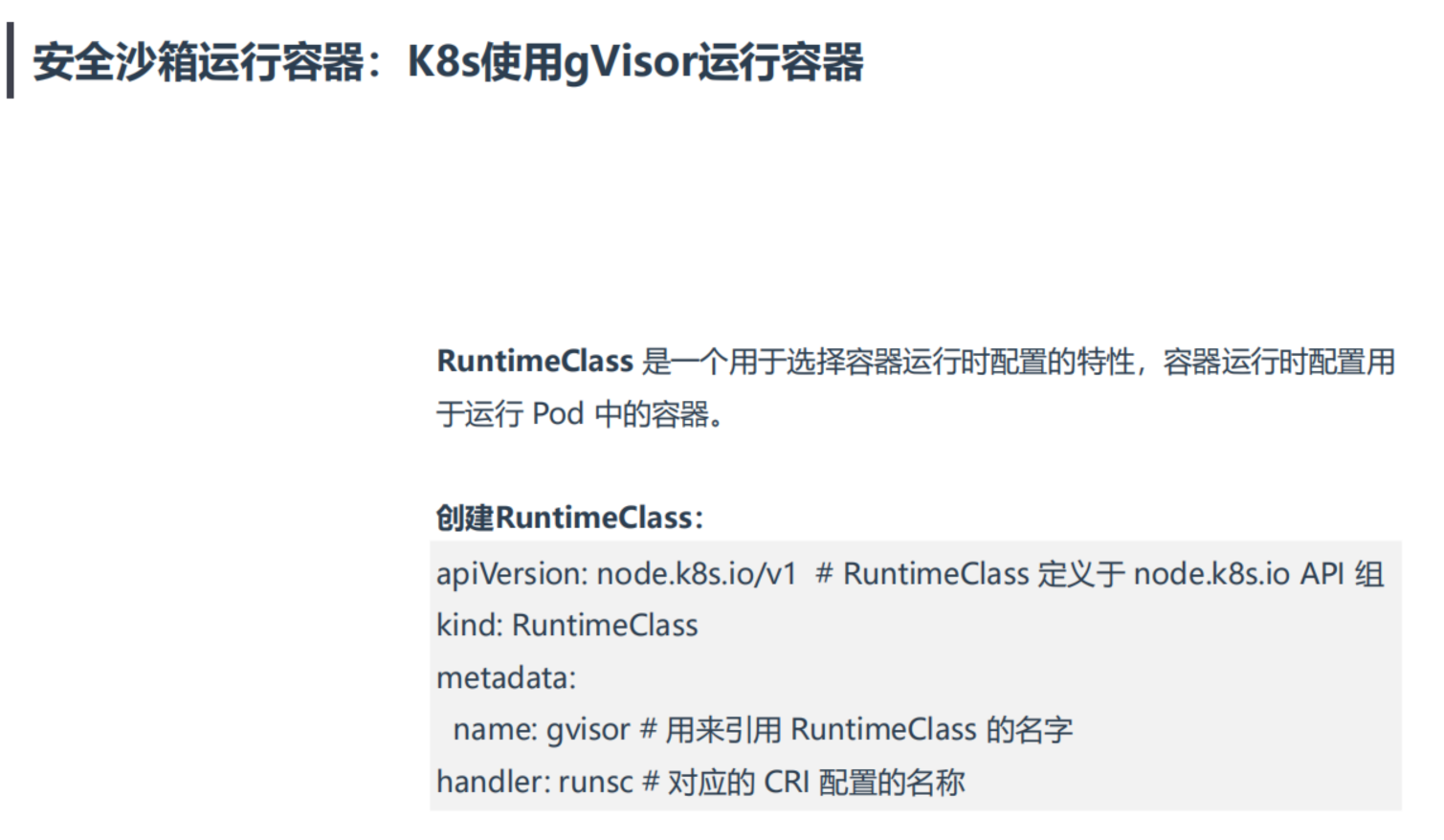

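Case 3: Running containers with gVisor in K8s

RuntimeClass is a feature for selecting the container runtime configuration that is used to run a Pod's containers.

==💘 Hands-on: Running containers with gVisor in K8s-2023.6.3 (tested successfully)==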

- Lab environment

```text
Lab environment:
1. Win10, VMware Workstation VMs;
2. K8s cluster: 3 CentOS 7.6 1810 VMs, 1 master node, 2 worker nodes
   k8s version: v1.20.0
   docker://20.10.7
```

- Lab software

```text
Link: https://pan.baidu.com/s/1kmPpRWyWsvk0k8FM2jjdJw?pwd=0820
Extraction code: 0820
2023.6.3-K8s使用gVisor运行容器-code
```

1. Create a RuntimeClass

```yaml
[root@k8s-master1 ~]#mkdir k8s-gvisor
[root@k8s-master1 ~]#cd k8s-gvisor/
[root@k8s-master1 k8s-gvisor]#vim runtimeclass.yaml
apiVersion: node.k8s.io/v1  # RuntimeClass is defined in the node.k8s.io API group
kind: RuntimeClass
metadata:
  name: gvisor  # the name used to reference this RuntimeClass
handler: runsc  # name of the corresponding CRI runtime configuration

# Deploy
[root@k8s-master1 k8s-gvisor]#kubectl apply -f runtimeclass.yaml
runtimeclass.node.k8s.io/gvisor created
[root@k8s-master1 k8s-gvisor]#kubectl get runtimeclasses
NAME     HANDLER   AGE
gvisor   runsc     13s
```

2. Create a Pod to test gVisor

```yaml
[root@k8s-master1 k8s-gvisor]#vim pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-gvisor
spec:
  runtimeClassName: gvisor
  nodeName: "k8s-node1"  # note: nodeName is needed here so the Pod lands on the node where the runsc runtime is enabled!
  containers:
    - name: nginx
      image: nginx


# Deploy
[root@k8s-master1 k8s-gvisor]#kubectl apply -f pod.yaml
pod/nginx-gvisor created
```

3. Verify

```bash
[root@k8s-master1 k8s-gvisor]#kubectl get po -owide
NAME           READY   STATUS    RESTARTS   AGE   IP              NODE        NOMINATED NODE   READINESS GATES
nginx-gvisor   1/1     Running   0          11m   10.244.36.101   k8s-node1   <none>           <none>
[root@k8s-master1 k8s-gvisor]#kubectl get node -owide
NAME          STATUS   ROLES                  AGE    VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION              CONTAINER-RUNTIME
k8s-master1   Ready    control-plane,master   223d   v1.20.0   172.29.9.31   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64       docker://20.10.7
k8s-node1     Ready    <none>                 223d   v1.20.0   172.29.9.32   <none>        CentOS Linux 7 (Core)   6.3.5-1.el7.elrepo.x86_64   containerd://1.4.11
k8s-node2     Ready    <none>                 223d   v1.20.0   172.29.9.33   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64       docker://20.10.7

[root@k8s-master1 k8s-gvisor]#kubectl exec nginx-gvisor -- dmesg
[    0.000000] Starting gVisor...
[    0.281926] Creating bureaucratic processes...
[    0.750139] Mounting deweydecimalfs...
[    0.931675] Forking spaghetti code...
[    1.122117] Creating cloned children...
[    1.339021] Recruiting cron-ies...
[    1.589506] Adversarially training Redcode AI...
[    1.615452] Consulting tar man page...
[    1.899674] Waiting for children...
[    2.110548] Committing treasure map to memory...
[    2.488117] Feeding the init monster...
[    2.746305] Ready!
```

As expected: the dmesg output shows that this Pod uses gVisor as its container runtime.

Test complete. 😘
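Pinning `nodeName` works for a single test node but bypasses the scheduler entirely. A RuntimeClass in `node.k8s.io/v1` can instead carry a `scheduling.nodeSelector`, which the RuntimeClass admission controller merges into every Pod that uses the class. The Python sketch below generates such a RuntimeClass plus a Pod without `nodeName`, wrapped in a `v1` `List` and printed as JSON (kubectl accepts JSON as well as YAML, e.g. `python3 gen_gvisor.py | kubectl apply -f -`). The node label `runtime=gvisor` and the script name are hypothetical; the label would first be added with `kubectl label node k8s-node1 runtime=gvisor`.

```python
import json
from typing import Any, Dict

NODE_LABEL = {"runtime": "gvisor"}  # hypothetical label; add it to k8s-node1 first


def runtime_class(name: str, handler: str, node_labels: Dict[str, str]) -> Dict[str, Any]:
    """RuntimeClass whose Pods are only scheduled onto nodes carrying node_labels."""
    return {
        "apiVersion": "node.k8s.io/v1",
        "kind": "RuntimeClass",
        "metadata": {"name": name},
        "handler": handler,  # must match the runtime name in containerd's config.toml
        "scheduling": {"nodeSelector": dict(node_labels)},
    }


def sandboxed_pod(name: str, image: str, runtime_class_name: str, container: str = "nginx") -> Dict[str, Any]:
    """Pod that opts into the RuntimeClass; no nodeName pinning needed."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "runtimeClassName": runtime_class_name,
            "containers": [{"name": container, "image": image}],
        },
    }


manifests = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        runtime_class("gvisor", "runsc", NODE_LABEL),
        sandboxed_pod("nginx-gvisor", "nginx", "gvisor"),
    ],
}

if __name__ == "__main__":
    print(json.dumps(manifests, indent=2))
```

With this in place, any Pod that sets `runtimeClassName: gvisor` is steered to labelled nodes automatically, so adding more gVisor nodes only requires labelling them.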

FAQ

Summary

Multiple container runtimes

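About me

The principles of my blog:

- Clean layout and concise language;

- Documents as manuals: detailed steps, no hidden pitfalls, source code provided;

- All my hands-on documents have been personally tested and work. If you have any questions while following along, feel free to contact me and I will help you solve them. Let's improve together!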

🍀 WeChat (QR code) x2675263825 (舍得), QQ: 2675263825.

🍀 WeChat official account 《云原生架构师实战》 (Cloud-Native Architect in Practice)

🍀 Yuque

https://www.yuque.com/xyy-onlyone

🍀 CSDN https://blog.csdn.net/weixin_39246554?spm=1010.2135.3001.5421

🍀 Zhihu https://www.zhihu.com/people/foryouone

Finally

That's all for this time. Thanks for reading, and I wish you all a happy life and meaningful days. See you next time!